The saying “Greater power brings greater responsibility” is familiar to most of us. Today, after years of technological innovation, the advent of artificial intelligence (AI) has given humanity immense power. But if we do not use it responsibly and cautiously, it could turn against us.

Responsible AI is the implementation and use of AI in an ethical and just manner. It upholds transparency, accountability, fairness and the welfare of both society and the individual. The goal of responsible AI is to reduce the potential risks and biases that AI brings to its users while protecting their privacy, so that the technology benefits humanity rather than exposing us to threats and misleading outcomes.

For example, for a machine to identify a human face, we must feed many photos of human faces to its AI model. But if we feed it photos of only one skin tone, the AI may fail to recognize faces with other skin tones as human faces. With responsible AI, we train on photos covering the full range of skin tones so that there is no partiality and everyone is treated equitably.

Ethical Considerations

Ethical considerations in responsible AI aim to align AI technologies with moral principles and human values, while also taking legal aspects into account and enabling positive development in society. The key aspects they deal with are bias, fairness, transparency, inclusivity, security, human autonomy and societal impact. Human autonomy means ensuring that AI systems respect, preserve and enhance human decision-making rather than replacing or unduly influencing it. It deserves particular emphasis because the influence of AI on decision-making, especially among young people, appears to have increased dramatically, which may lead to negative effects such as an inability to make decisions on one’s own.

Moreover, some reports suggest that AI is poised to take on a dominant role in many areas of life, which makes it essential to maintain human autonomy through ethical considerations in responsible AI.

For example, a study by Jonathan Downie states that ‘the quick development of artificial intelligence (AI) and its application in interpreting have raised concern that human interpreters may become unnecessary. In particular, the promotion of interpreting AI machines and their introduction at some high-level international conferences have left the impression that machines would soon substitute humans’ (Downie, 2020).

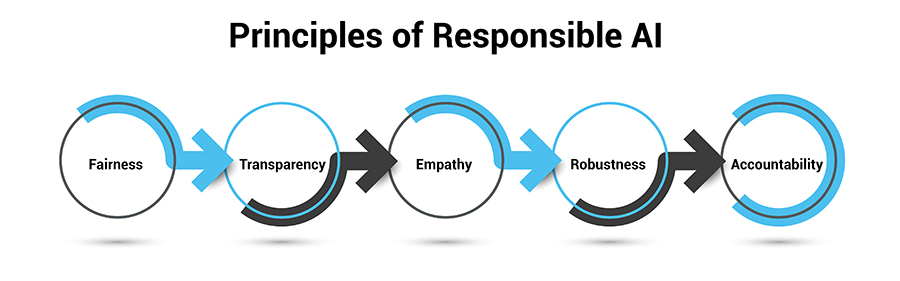

Principles and Practices

To make sure that the AI technologies we develop and use are responsible, we need to keep certain principles and practices in mind. Professionals consistently and strongly recommend adhering to these principles, chiefly fairness, transparency, empathy, robustness and accountability. Let’s briefly look at each of them.

01. Fairness

Fairness means guaranteeing that AI systems treat all individuals and groups impartially. In practice, this means consistently evaluating AI algorithms for potential biases and correcting them to prevent discriminatory results.

02. Transparency

Transparency means openness throughout the creation and implementation of AI systems. Most importantly, it involves giving stakeholders, including users, developers and the public, a clear understanding of how AI algorithms function and make decisions. In practice, it is achieved by clearly articulating how AI systems work, including their decision-making processes, to establish trust and comprehension.

03. Empathy

The empathy principle in responsible AI underscores the importance of considering the emotional and practical needs of the individuals affected by AI systems. It advocates a human-centered design approach that acknowledges the varied perspectives, experiences and worries of end-users. In practice, it means involving diverse stakeholders, including end-users, in the design process to understand and address their concerns, so that the resulting system serves the wider public.

04. Robustness

Robustness means that an AI system is resilient, reliable and stable over time, and strong enough to complete its task even in adverse situations. Robust AI systems maintain their functionality and accuracy, minimizing the risk of errors or disruptions. This can be achieved and maintained by using algorithms that perform consistently and efficiently when faced with unexpected inputs or uncertainty.

05. Accountability

Organizational culture plays a pivotal role in fostering accountability in AI: individuals across different departments and functions collectively hold themselves, and the AI technologies they use, to a high standard of accountability. This brings responsibility and ethics into how they treat both the AI technology and the people who use it.
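To make the fairness principle above more concrete, here is a minimal sketch of one common way to evaluate a model for bias: comparing how often each group receives a positive outcome. The function names, the sample predictions and the group labels are all hypothetical and chosen only for illustration. The gap in selection rates (often called the demographic parity difference) is just one of several possible fairness measures, not a complete audit.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Return the share of positive predictions (1) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_gap(predictions, groups):
    """Largest difference in selection rate between any two groups."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical model outputs (1 = approved, 0 = rejected) and group labels.
preds = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

rates = selection_rates(preds, groups)
gap = demographic_parity_gap(preds, groups)
print(rates, gap)
```

A gap close to zero suggests that the groups are selected at similar rates, while a large gap is a signal to investigate the model and its training data further.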

Ways to Implement Responsible AI

Simply knowing the need for, consequences of and principles behind responsible AI does not bring about change. Putting it into practice is what produces results and builds a better future. Executing it is therefore just as important as developing and strategizing it.

One of the most effective ways is to use a human-centered design approach. Human-Centered Design (HCD) in responsible AI involves prioritizing the needs, experiences and well-being of humans throughout the design and development of AI systems. This means understanding the perspectives of end-users and integrating their feedback to create ethical, user-friendly AI technologies that align with human values.

Another way to implement responsible AI is to continuously examine your data and identify the limitations of the dataset. AI outputs reflect the data the models are trained on. It is therefore crucial to examine the data whenever possible, without compromising privacy or confidentiality, in order to identify and mitigate potential biases, inaccuracies or ethical concerns. Identifying the limitations of a dataset likewise helps ensure that the AI model is trained on representative, diverse and fair data, contributing to more reliable and less biased outcomes.
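As an illustration of what examining a dataset can look like, the sketch below counts how well each group is represented and flags groups that fall below a chosen share. Everything here is an assumption made for the example: the `representation_report` helper, the `skin_tone` field, the sample records and the 15% threshold. Because it works only with aggregate counts, a check like this can often be run without exposing individual records.

```python
from collections import Counter


def representation_report(records, attribute, min_share=0.15):
    """Return each group's share of the dataset and the groups below min_share.

    Records that lack the attribute are counted under the label "missing".
    """
    if not records:
        raise ValueError("records must not be empty")
    values = [record.get(attribute, "missing") for record in records]
    counts = Counter(values)
    total = len(values)
    shares = {value: count / total for value, count in counts.items()}
    underrepresented = sorted(v for v, share in shares.items() if share < min_share)
    return shares, underrepresented


# Hypothetical metadata for a small face-image dataset.
dataset = (
    [{"skin_tone": "light"}] * 7
    + [{"skin_tone": "medium"}] * 2
    + [{"skin_tone": "dark"}] * 1
)

shares, underrepresented = representation_report(dataset, "skin_tone")
print(shares)
print("Underrepresented groups:", underrepresented)
```

Groups flagged as underrepresented point to a limitation of the dataset that may need more data collection or re-balancing before training.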

Finally, for proper implementation, the system has to be tested and updated after deployment. Continuous monitoring and timely updates are essential to ensure the judicious use of the AI technology. Various testing practices and quality control methods have to be applied to ensure the AI is working responsibly.

If testing detects any anomalies, the system should be upgraded and the essential modifications incorporated. This helps make the AI both responsible and as close to flawless as possible, for the benefit of stakeholders and for a better tomorrow.
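One simple form of post-deployment monitoring is to compare the model’s recent accuracy with the accuracy measured before release and flag it for review when the drop is too large. The sketch below assumes that labelled feedback is available for recent predictions. The function names, the baseline of 0.90, the tolerance of 0.05 and the sample data are hypothetical values picked for illustration, not recommended settings.

```python
def accuracy(predictions, labels):
    """Fraction of predictions that match the true labels."""
    if not labels or len(predictions) != len(labels):
        raise ValueError("predictions and labels must be non-empty and equal in length")
    correct = sum(1 for pred, label in zip(predictions, labels) if pred == label)
    return correct / len(labels)


def needs_review(baseline_accuracy, recent_predictions, recent_labels, tolerance=0.05):
    """Flag the model when recent accuracy falls more than `tolerance` below baseline.

    Returns a (flagged, recent_accuracy) tuple.
    """
    recent_accuracy = accuracy(recent_predictions, recent_labels)
    flagged = recent_accuracy < baseline_accuracy - tolerance
    return flagged, recent_accuracy


# Hypothetical numbers: accuracy measured before release and a recent sample.
baseline = 0.90
recent_preds = [1, 0, 1, 1, 0, 1, 0, 0, 1, 1]
recent_labels = [1, 0, 0, 1, 0, 0, 0, 1, 1, 1]

flagged, recent = needs_review(baseline, recent_preds, recent_labels)
print(f"recent accuracy = {recent:.2f}, needs review = {flagged}")
```

In practice, a check like this would run on a schedule, and a flagged result would trigger the investigation and updates described above.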

Challenges and Risks in Responsible AI

Challenges are unavoidable in any phase of life, let alone in responsible AI. We have talked about the principles, implementation and use of AI in a responsible way, and keeping up with all of this is itself the challenge we face.

“Success is not the end, failure is not fatal: What matters truly is the courage to persist and move forward.” This quote is worth keeping in mind throughout the challenging journey of responsible AI and beyond.

One prominent challenge is protecting privacy and ensuring security. In a world of open data, it is quite difficult to maintain confidentiality. Assuring users of that confidentiality is an even greater challenge, and it calls for promises that can genuinely be kept.

Another significant issue is bias, a concern raised at the outset of this article. As humans, we vehemently oppose bias and discrimination and find them intolerable. Therefore, if a machine produces biased results, it is likely to be perceived as untrustworthy and unreliable. For AI technologies to be effective, have a positive impact and achieve their larger goals, it is crucial to minimize bias as much as possible.

Setting proper and adequate rules and policies that are applicable, reliable and beneficial is indispensable. Without such rules, we cannot make sure that an AI system behaves ethically towards humanity and operates within its boundaries. Hiring experts and bringing in proper analysis and decision-making is how we face this challenge in developing responsible AI.

Benefits and Real-life Examples

Users, employees, stakeholders and even governments demand responsible AI, which shows that its value is undeniable.

Firstly, a company’s success comes largely from its innovation and competitiveness. Responsible AI supports both: it encourages innovation and healthy competition, which in turn helps companies provide the distinctive services that are in demand today.

Similarly, as mentioned earlier, it is crucial to face the challenge of ethics and legality in AI, and companies that implement responsible AI minimize their potential risks. This involves implementing policies and governance structures and fostering a cultural shift to ensure AI systems align with organizational values. A well-executed responsible AI program helps in proactively identifying and addressing issues before deploying the system, reducing the occurrence of failures. Even when failures occur, the program ensures their impact is minimized, resulting in less harm to individuals and society.

Moreover, when AI resources are aligned with every aspect of the company, from its mission to its ethics, attaining goals becomes much easier, less time-consuming and more efficient, with fewer errors.

To drive home the need for responsible AI, let’s see how it has been applied in the real world. Microsoft has established a responsible AI governance framework in partnership with its AI, Ethics, and Effects in Engineering and Research (Aether) Committee, along with the Office of Responsible AI (ORA). These groups work collaboratively to propagate and enforce the defined values of responsible AI within Microsoft. As a result, the company developed committed and innovative leadership, built inclusive governance models, and invested in and empowered its employees.

Another notable example of responsible AI in practice comes from the healthcare sector, specifically the use of AI in cancer care. IBM’s Watson for Oncology, a cognitive computing system, has been employed to assist oncologists. It uses AI algorithms to analyze vast amounts of medical literature, clinical trial data and patient records, and it assists oncologists in identifying potential treatment options by providing evidence-based insights. The changes it brought include personalized treatment plans, enhanced decision support, a patient-centric approach, and better consistency and accuracy, giving it a global impact.

Conclusion

The imperative for responsible AI is undeniable. As artificial intelligence becomes increasingly intertwined with our daily lives, the ethical considerations surrounding its development and deployment become paramount. Responsible AI practices not only mitigate risks and biases but also foster trust, transparency, and positive societal impact. Embracing responsible AI is not merely a choice but a moral obligation, ensuring that advancements in technology align with human values, respect individual rights, and contribute to a more equitable and inclusive future.