Businesses rely heavily on accurate and reliable information to make informed decisions. The Extract, Transform, Load (ETL) process is a critical step in managing data, moving it from various sources, transforming it into a usable format, and loading it into a target system like a data warehouse. However, without proper data quality checks, this process can introduce errors, inconsistencies, and incomplete data, leading to flawed analytics and poor business outcomes. This article explores why data quality checks are essential in ETL, the key types to implement, and practical steps to ensure data quality in ETL, helping you build a robust data pipeline.

Why Data Quality Checks Matter in ETL

Data quality checks are like a safety net for your ETL pipeline. They ensure that the data moving through the extraction, transformation, and loading stages remains accurate, complete, and reliable. Without these checks, small issues can snowball into big problems, affecting everything from business reports to customer insights.

Imagine a retail company analyzing sales data to plan its next marketing campaign. If the ETL process misses duplicate customer records or leaves out key fields like purchase dates, the analysis could overestimate demand or target the wrong audience. This is where ETL data validation becomes vital. It catches these errors early, saving time, money, and reputation.

The importance of data quality in ETL goes beyond avoiding mistakes. High-quality data drives better decision-making, improves operational efficiency, and supports compliance with regulations, especially in industries like finance and healthcare. Poor data quality can lead to financial losses, damaged customer trust, and even legal penalties. According to Gartner, 80% of organizations seeking to scale digital business may fail without a modern approach to data and analytics governance, which highlights the need for strong data quality checks.

The Risks of Ignoring Data Quality

When data quality in ETL is neglected, several issues can arise:

- Inaccurate Insights: Missing or incorrect data can skew analytics, leading to wrong business strategies.

- Operational Delays: Errors in the ETL process can halt workflows, requiring manual fixes that waste time.

- Compliance Risks: Regulatory bodies demand accurate data handling, and poor quality can result in fines.

- Cost Overruns: Fixing data issues after loading is far more expensive than catching them early.

For example, a healthcare provider relying on ETL to manage patient records might face serious consequences if NULL values in critical fields like medication history go unnoticed. This underscores why data quality checks are non-negotiable in ETL projects.

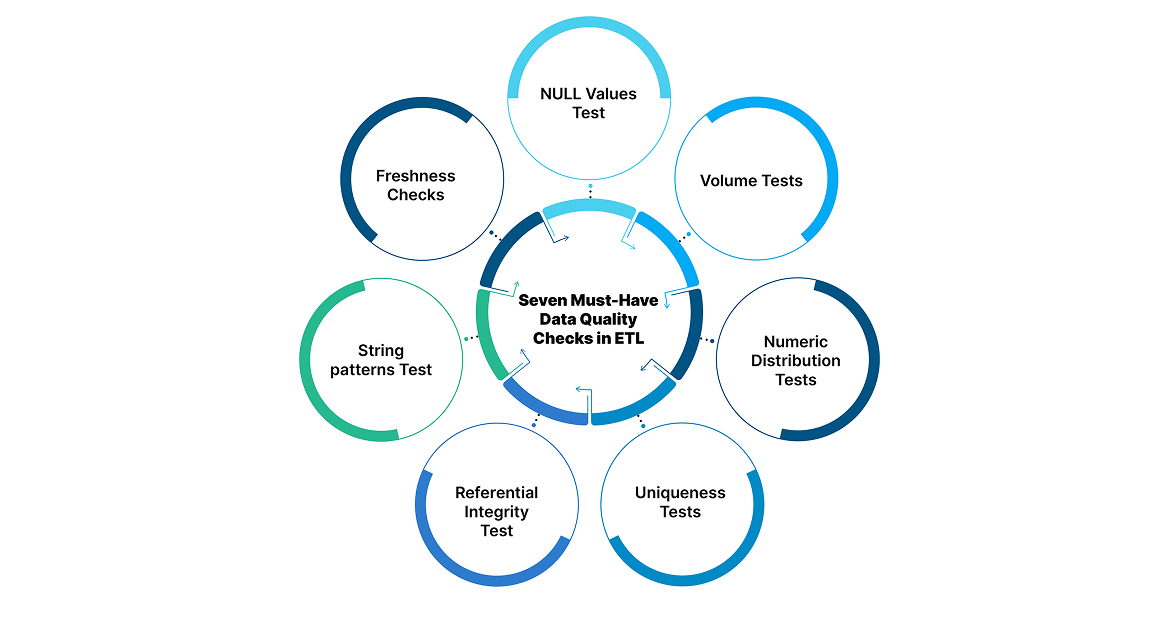

Seven Must-Have Data Quality Checks in ETL

To maintain data quality in ETL, data engineers should incorporate the following seven checks during the ETL process. Each addresses a specific aspect of data integrity and reliability.

NULL Values Test

This check identifies missing data in required fields. For instance, if a customer database lacks email addresses, it can disrupt marketing efforts. A simple SQL-based test, like dbt’s not_null test, scans for NULL values and flags them for review. However, manually fixing numerous NULLs can be time-consuming, so automation is key.
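The same idea can be sketched in plain Python. This is not dbt's implementation (dbt runs the check as SQL in the warehouse); it is a minimal illustration assuming rows arrive as dictionaries and `None` stands in for SQL NULL. The function name `find_null_violations` and the sample data are hypothetical.

```python
from typing import Any


def find_null_violations(rows: list[dict[str, Any]], required: list[str]) -> list[tuple[int, str]]:
    """Return (row_index, field) pairs where a required field is missing or None."""
    violations = []
    for i, row in enumerate(rows):
        for field in required:
            # A missing key is treated the same as an explicit NULL.
            if row.get(field) is None:
                violations.append((i, field))
    return violations


customers = [
    {"id": 1, "email": "a@example.com"},
    {"id": 2, "email": None},
    {"id": 3},
]
print(find_null_violations(customers, ["id", "email"]))  # [(1, 'email'), (2, 'email')]
```

Returning the offending positions rather than a single pass/fail flag makes it easier to route the affected records to review instead of fixing them by hand.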

Volume Tests

Volume tests ensure the data load matches expected quantities. A sudden drop from 200 to 2 rows or a spike to 2,000 could signal a collection error. This check helps detect issues like misconfigured systems, ensuring the ETL pipeline processes the right amount of data.
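A fixed-band volume test can be as small as the function below. It is a sketch under the assumption that a typical load is about 200 rows; the bounds of 150 and 250 and the function name `check_volume` are hypothetical and would come from your own historical loads.

```python
from typing import Optional


def check_volume(row_count: int, expected_min: int, expected_max: int) -> Optional[str]:
    """Return an error message if row_count falls outside [expected_min, expected_max]."""
    if row_count < expected_min:
        return f"volume too low: {row_count} rows (expected at least {expected_min})"
    if row_count > expected_max:
        return f"volume too high: {row_count} rows (expected at most {expected_max})"
    return None  # within the expected band


# The three loads from the example: normal, sudden drop, sudden spike.
for count in (200, 2, 2000):
    print(count, check_volume(count, expected_min=150, expected_max=250))
```

Fixed bands are easy to reason about but need updating whenever normal volumes change.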

Numeric Distribution Tests

This test verifies that numeric data, such as prices or ages, follows a plausible distribution. Skewed data, such as an influx of unrealistic ages caused by a vendor error, can mislead analyses. dbt’s built-in accepted_values test restricts a column to a fixed list of allowed values, while packages such as dbt_utils provide an accepted_range test for numeric bounds. Checking many fields this way by hand can still be challenging.
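The sketch below combines a per-value range check with a tolerance on how many values may fall outside it. The 0 to 120 age range and the 1% tolerance (`max_bad_share`) are assumptions for illustration, and the function names are hypothetical.

```python
def out_of_range(values: list[float], lo: float, hi: float) -> list[float]:
    """Return the values that fall outside the inclusive range [lo, hi]."""
    return [v for v in values if not lo <= v <= hi]


def distribution_ok(values: list[float], lo: float, hi: float, max_bad_share: float = 0.01) -> bool:
    """Pass if at most max_bad_share of the values are out of range."""
    if not values:
        return True  # nothing to judge here; a volume test should catch empty loads
    return len(out_of_range(values, lo, hi)) / len(values) <= max_bad_share


ages = [34, 29, 41, 250, 38, -5]
print(out_of_range(ages, 0, 120))     # [250, -5]
print(distribution_ok(ages, 0, 120))  # False: 2 of 6 values are implausible
```

A tolerance lets a pipeline accept the occasional bad value while still failing on a vendor-wide error like the one described above.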

Uniqueness Tests

Duplicate records, such as multiple entries for the same customer, can distort reports. Uniqueness tests flag these duplicates so they can be merged or removed, protecting data integrity. However, large datasets with many key fields to check can make this process complex.
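Here is a minimal sketch that separates the test (finding duplicate keys) from the fix (deduplicating). It assumes rows are dictionaries and that the business key is known; using `email` as the key and keeping the first occurrence are both illustrative choices, not rules.

```python
from collections import Counter


def find_duplicates(rows: list[dict], key_fields: list[str]) -> dict[tuple, int]:
    """Return each key that appears more than once, with its number of occurrences."""
    counts = Counter(tuple(row[f] for f in key_fields) for row in rows)
    return {key: n for key, n in counts.items() if n > 1}


def deduplicate(rows: list[dict], key_fields: list[str]) -> list[dict]:
    """Keep the first row seen for each key and drop later duplicates."""
    seen: set[tuple] = set()
    kept = []
    for row in rows:
        key = tuple(row[f] for f in key_fields)
        if key not in seen:
            seen.add(key)
            kept.append(row)
    return kept


customer_rows = [
    {"email": "ann@example.com", "name": "Ann"},
    {"email": "bo@example.com", "name": "Bo"},
    {"email": "ann@example.com", "name": "Ann B."},
]
print(find_duplicates(customer_rows, ["email"]))   # {('ann@example.com',): 2}
print(len(deduplicate(customer_rows, ["email"])))  # 2
```

In practice, merging duplicates (for example, combining the two "Ann" profiles) usually needs business rules that a simple keep-first policy does not capture.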

Referential Integrity Test

This ensures foreign keys in one table match primary keys in another, maintaining relationships. For example, an “orders” table must link correctly to a “customers” table via customer IDs. Known as the “relationships test” in dbt, this check prevents broken data links.
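The orders-to-customers example can be sketched as a set lookup. This is an in-memory illustration, not how dbt's relationships test is written; skipping NULL foreign keys here is a design choice that leaves them to the NULL values test. Table and function names are hypothetical.

```python
def find_orphans(child_rows: list[dict], fk_field: str,
                 parent_rows: list[dict], pk_field: str) -> list[dict]:
    """Return child rows whose foreign key has no matching parent primary key."""
    parent_keys = {row[pk_field] for row in parent_rows}
    return [
        row for row in child_rows
        # NULL foreign keys are skipped here and left to the NULL values test.
        if row[fk_field] is not None and row[fk_field] not in parent_keys
    ]


customers_table = [{"customer_id": 1}, {"customer_id": 2}]
orders_table = [
    {"order_id": 10, "customer_id": 1},
    {"order_id": 11, "customer_id": 3},     # no customer 3 exists
    {"order_id": 12, "customer_id": None},  # handled by the NULL test instead
]
print(find_orphans(orders_table, "customer_id", customers_table, "customer_id"))
# [{'order_id': 11, 'customer_id': 3}]
```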

String Patterns Test

String data, like email addresses or phone numbers, should follow expected formats. Regular expressions can validate these patterns, flagging invalid entries. Yet, large datasets with numerous string fields can slow down this process.
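A regex-based pattern check might look like the sketch below. The patterns are hypothetical and deliberately loose (real email validation is far more involved); `re.fullmatch` is used so the whole value, not just a prefix, must match.

```python
import re

# Hypothetical, deliberately loose patterns; production rules should come
# from your own data standards.
PATTERNS = {
    "email": re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+"),
    "phone": re.compile(r"\+?[0-9]{7,15}"),  # digits only, optional leading +
}


def invalid_values(rows: list[dict], field: str) -> list[str]:
    """Return non-NULL values of `field` that do not fully match its pattern."""
    pattern = PATTERNS[field]
    return [
        row[field] for row in rows
        if row.get(field) is not None and not pattern.fullmatch(row[field])
    ]


contacts = [
    {"email": "ann@example.com", "phone": "+15551234567"},
    {"email": "bob@", "phone": "555-1234"},
    {"email": "carl example.com", "phone": None},
]
print(invalid_values(contacts, "email"))  # ['bob@', 'carl example.com']
print(invalid_values(contacts, "phone"))  # ['555-1234']
```

Compiling each pattern once, rather than per row, helps with the performance concern on large datasets.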

Freshness Checks

Freshness checks confirm data is up-to-date, using timestamps to monitor updates. Outdated data can mislead decisions, especially in fast-changing environments. These checks can be manual or automated via ETL tools, though frequent updates from multiple sources add complexity.
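A freshness check compares the latest load timestamp against an allowed age. In the sketch below, the one-hour limit is an assumption, and the function `is_fresh` is hypothetical. It expects timezone-aware timestamps so that data from sources in different time zones compares correctly.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def is_fresh(last_loaded_at: datetime, max_age: timedelta,
             now: Optional[datetime] = None) -> bool:
    """True if the most recent load is no older than max_age.

    Both timestamps must be timezone-aware so the subtraction is unambiguous.
    """
    now = now or datetime.now(timezone.utc)
    return now - last_loaded_at <= max_age


# A fixed reference time keeps the example reproducible.
reference_time = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
print(is_fresh(datetime(2024, 1, 1, 11, 30, tzinfo=timezone.utc), timedelta(hours=1), reference_time))  # True
print(is_fresh(datetime(2023, 12, 31, 9, 0, tzinfo=timezone.utc), timedelta(hours=1), reference_time))  # False
```

Passing `now` explicitly makes the check easy to test; in production it can default to the current UTC time.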

Challenges in Performing Data Quality Checks

While data quality checks are essential, they come with challenges. Manual testing becomes impractical as data volumes grow, because the tests need constant updates. For instance, a NULL values test might need adjustment when new fields are added. Volume tests struggle with fluctuating baselines, and numeric distribution tests can be slow when many fields are involved. Referential integrity and string pattern checks are harder to apply to data from multiple sources, and freshness checks grow complex when many sources update on different schedules.

These hurdles often lead to incomplete testing, leaving gaps in data quality in ETL.

Data Observability: A Modern Approach

As ETL pipelines grow more complex, traditional data quality checks may miss “unknown unknowns”: unpredictable issues that no predefined test was written to catch. Data observability tools such as Monte Carlo use machine learning to detect anomalies in real time, help engineers resolve them, and prevent future incidents. Unlike manual testing, this approach provides a holistic view of data health.

For example, if a sudden data volume drop signals a collection failure, observability tools can alert engineers instantly, reducing downtime. This proactive approach ensures data quality in ETL without the constant need for new test scripts.
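To make the idea of a learned baseline concrete, here is a simple statistical stand-in. It is not how Monte Carlo or any specific observability product works, since those use richer models. The sketch flags a daily row count that sits more than three standard deviations from recent history. The threshold of 3 and the sample history are assumptions.

```python
import statistics


def is_volume_anomaly(history: list[int], today: int, z_threshold: float = 3.0) -> bool:
    """Flag today's row count if it sits more than z_threshold standard
    deviations from the mean of recent loads (history needs >= 2 points)."""
    mean = statistics.mean(history)
    stdev = statistics.stdev(history)
    if stdev == 0:
        return today != mean  # perfectly stable history: any change is unusual
    return abs(today - mean) / stdev > z_threshold


daily_rows = [198, 205, 201, 195, 210, 203, 199]
print(is_volume_anomaly(daily_rows, 2))    # True: the sudden drop to 2 rows
print(is_volume_anomaly(daily_rows, 204))  # False
```

Unlike the fixed-band volume test, the baseline here moves with the data, so it does not need manual updates when normal volumes drift.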

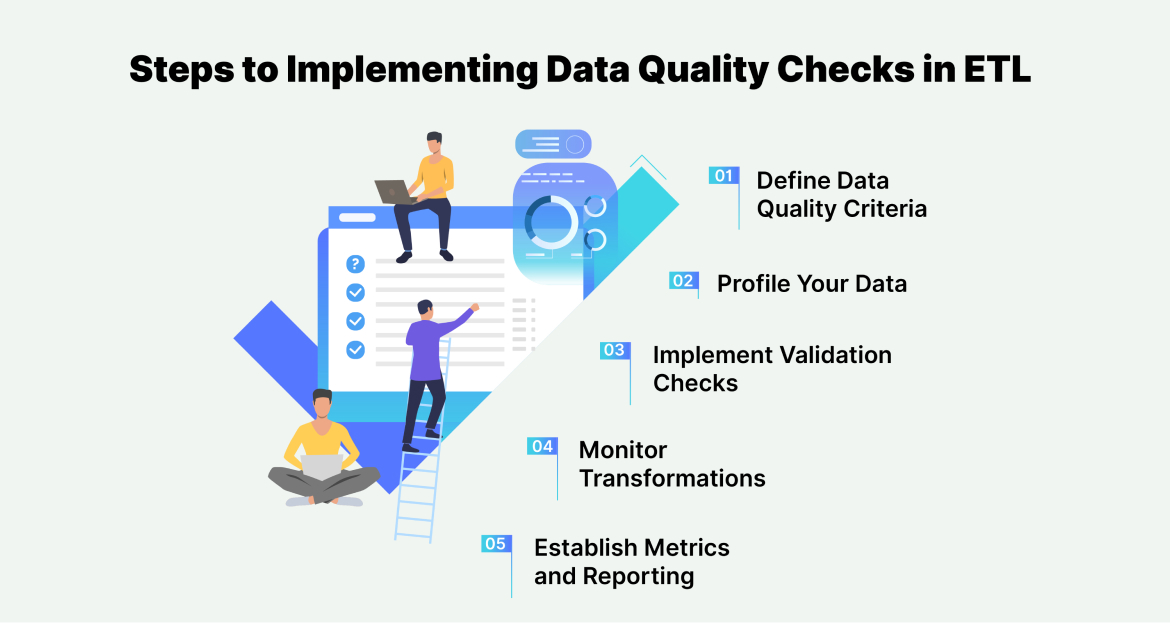

How to Implement Data Quality Checks in ETL: A Step-by-Step Guide

Implementing effective data quality checks requires a structured approach. Here’s a five-step guide to get started:

Define Data Quality Criteria

Start by setting clear standards for accuracy, completeness, consistency, and timeliness. For example, decide that no more than 5% of records can have NULL values.
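Writing criteria down as data, rather than burying them in test code, makes them easy to review with stakeholders. The sketch below encodes the 5% NULL limit from the example. The `NullRateCriterion` class and the sample records are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NullRateCriterion:
    """One completeness rule: the share of NULLs allowed in a field."""
    field: str
    max_null_rate: float  # 0.05 means "no more than 5% of records may be NULL"


def null_rate(rows: list[dict], field: str) -> float:
    if not rows:
        return 0.0
    return sum(1 for row in rows if row.get(field) is None) / len(rows)


def evaluate(rows: list[dict], criteria: list[NullRateCriterion]) -> dict[str, bool]:
    """Map each field to True if it meets its criterion."""
    return {c.field: null_rate(rows, c.field) <= c.max_null_rate for c in criteria}


# 20 sample records: one missing email (5%), five missing phone numbers (25%).
records = [
    {"email": None if i == 0 else f"user{i}@example.com",
     "phone": None if i % 4 == 0 else "5551234"}
    for i in range(20)
]
criteria = [NullRateCriterion("email", 0.05), NullRateCriterion("phone", 0.05)]
print(evaluate(records, criteria))  # {'email': True, 'phone': False}
```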

Profile Your Data

Use data profiling to analyze structure, patterns, and anomalies. Tools like Talend or DataCleaner can highlight outliers, helping you understand data quality before transformation.
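Dedicated tools produce much richer profiles, but a basic column profile looks like the sketch below. It does not reflect how Talend or DataCleaner work internally. It assumes rows are dictionaries, and the function name `profile_column` is hypothetical.

```python
import statistics
from typing import Any


def profile_column(rows: list[dict], field: str) -> dict[str, Any]:
    """Summarize one column: row count, NULLs, distinct values and, for numbers, min/max/mean."""
    values = [row.get(field) for row in rows]
    present = [v for v in values if v is not None]
    summary: dict[str, Any] = {
        "count": len(values),
        "nulls": len(values) - len(present),
        "distinct": len(set(present)),
    }
    # bool is a subclass of int in Python, so exclude it explicitly.
    numeric = [v for v in present if isinstance(v, (int, float)) and not isinstance(v, bool)]
    if numeric:
        summary.update(min=min(numeric), max=max(numeric), mean=statistics.mean(numeric))
    return summary


people = [{"age": 34}, {"age": None}, {"age": 29}, {"age": 34}, {"age": 250}]
print(profile_column(people, "age"))
# {'count': 5, 'nulls': 1, 'distinct': 3, 'min': 29, 'max': 250, 'mean': 86.75}
```

Even this small profile exposes the outlier: a maximum of 250 pulls the mean far above typical ages, which is the kind of finding that should shape the checks you write next.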

Implement Validation Checks

Add checks such as NULL and referential integrity tests at each ETL stage. Automate them where possible with tools like dbt or Informatica to catch issues early.
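One way to wire checks into every stage is a small gate that runs after each step and stops the pipeline on failure. The sketch below is a hypothetical illustration, not the API of dbt, Informatica, or any other tool. Each check is a function that returns error messages, and an empty list means the check passed.

```python
from typing import Callable

# A check takes the rows produced by a stage and returns error messages (empty = pass).
Check = Callable[[list[dict]], list[str]]


class DataQualityError(Exception):
    """Raised when a stage's output fails one or more checks."""


def require_field(field: str) -> Check:
    def check(rows: list[dict]) -> list[str]:
        return [f"row {i}: {field} is NULL" for i, row in enumerate(rows) if row.get(field) is None]
    return check


def min_rows(n: int) -> Check:
    def check(rows: list[dict]) -> list[str]:
        return [] if len(rows) >= n else [f"expected at least {n} rows, got {len(rows)}"]
    return check


def run_checks(stage: str, rows: list[dict], checks: list[Check]) -> list[dict]:
    """Run every check; stop the pipeline if any of them reports an error."""
    errors = [msg for check in checks for msg in check(rows)]
    if errors:
        raise DataQualityError(f"{stage} failed: " + "; ".join(errors))
    return rows  # pass the rows on to the next stage unchanged


extracted = run_checks("extract", [{"id": 1, "email": "a@example.com"}],
                       [min_rows(1), require_field("email")])
try:
    run_checks("transform", [{"id": 1, "email": None}], [require_field("email")])
except DataQualityError as exc:
    print(exc)  # transform failed: row 0: email is NULL
```

Failing fast at the stage where a problem first appears keeps bad rows out of later stages, which is where fixes become expensive.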

Monitor Transformations

Track data changes during transformation with real-time monitoring. This helps keep data quality intact through the transformation stage by flagging errors such as format mismatches as soon as they occur.
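A common way to monitor a transformation is to reconcile its input and output. The sketch below assumes a one-to-one transformation that should keep every row and preserve the total of an amount column. Transformations that legitimately filter or aggregate need different rules. The function name and sample data are hypothetical.

```python
import math


def reconcile(before: list[dict], after: list[dict], amount_field: str) -> list[str]:
    """Compare input and output of a transformation that should keep every row
    and preserve the total of amount_field; return any discrepancies."""
    issues = []
    if len(before) != len(after):
        issues.append(f"row count changed: {len(before)} -> {len(after)}")
    total_before = sum(row[amount_field] for row in before)
    total_after = sum(row[amount_field] for row in after)
    # Compare with a tolerance because float sums can differ in the last digits.
    if not math.isclose(total_before, total_after, rel_tol=1e-9):
        issues.append(f"{amount_field} total changed: {total_before} -> {total_after}")
    return issues


orders_before = [{"order_id": 1, "amount": 10.5}, {"order_id": 2, "amount": 4.25}]
good = [{**row, "currency": "USD"} for row in orders_before]     # adds a column only
buggy = [row for row in orders_before if row["amount"] > 5]      # silently drops a row
print(reconcile(orders_before, good, "amount"))   # []
print(reconcile(orders_before, buggy, "amount"))
# ['row count changed: 2 -> 1', 'amount total changed: 14.75 -> 10.5']
```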

Establish Metrics and Reporting

Define KPIs like error rates or freshness scores, and generate reports to track trends. Regular reviews help refine the process and maintain high standards.
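An error-rate KPI is simply failed rows divided by checked rows, per check and overall. The sketch below computes both and renders a plain-text report. The `CheckResult` record, the check names, and the counts are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class CheckResult:
    name: str
    rows_checked: int
    rows_failed: int

    @property
    def error_rate(self) -> float:
        return self.rows_failed / self.rows_checked if self.rows_checked else 0.0


def overall_error_rate(results: list[CheckResult]) -> float:
    """Failed rows across all checks divided by rows checked across all checks."""
    checked = sum(r.rows_checked for r in results)
    failed = sum(r.rows_failed for r in results)
    return failed / checked if checked else 0.0


def report(results: list[CheckResult]) -> str:
    lines = [f"{r.name}: {r.rows_failed}/{r.rows_checked} failed ({r.error_rate:.2%})" for r in results]
    lines.append(f"overall error rate: {overall_error_rate(results):.2%}")
    return "\n".join(lines)


results = [CheckResult("not_null_email", 1000, 12), CheckResult("unique_customer_id", 1000, 3)]
print(report(results))
```

Storing these results per run, rather than only printing them, is what makes it possible to track trends over time.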

Best Practices for ETL Data Quality Checks

To get the most out of data quality checks, follow these practical tips to keep your ETL process strong and reliable:

- Set Clear Metrics: Write down simple standards for things like accuracy and how up-to-date your data should be. This gives everyone a clear target to aim for during testing. For example, decide that no more than 2% of records can be incomplete.

- Automate Testing: Use tools like Apache NiFi or Talend to run tests automatically. This cuts down on mistakes humans might make and saves time, letting you focus on bigger tasks instead of checking everything by hand.

- Involve Stakeholders: Work closely with business teams, like marketing or sales, to make sure the checks match what they need. Their input helps ensure the data supports real business goals.

- Monitor Continuously: Set up observability tools to watch your data all the time. This helps you spot problems, like missing data, right away, before they cause bigger issues.

- Document Everything: Keep a record of all tests, fixes, and results. This creates a clear history, making it easy to track what’s been done and prove everything is in order during audits.

- Ensure Compliance: Make sure your checks follow industry rules and regulations, like data privacy laws. This avoids legal trouble and keeps your business safe.

Real-World Examples of Data Quality Checks

These real-life cases show how data quality checks solve problems and drive success:

- Duplicate Detection: A retail chain found duplicate customer profiles using uniqueness tests. By merging them, they improved their marketing campaigns, reaching the right customers without wasting effort.

- Format Validation: An e-commerce site checked that product IDs followed the correct format. This stopped pricing mistakes, ensuring customers saw accurate costs and boosting trust.

- Freshness Checks: A financial firm used freshness checks to keep trading data current. This met regulatory deadlines, helping them avoid penalties and make timely decisions.

- Referential Integrity: An online store fixed broken links between customer and order data. This improved reporting accuracy, making it easier to track sales and inventory.

These examples show that data quality checks can tackle everyday challenges and lead to better business outcomes.

Conclusion

Data quality checks are the backbone of a reliable ETL process. They ensure accuracy, completeness, and timeliness, preventing costly errors and supporting informed decisions. By implementing the seven key checks (NULL values, volume, numeric distribution, uniqueness, referential integrity, string patterns, and freshness) alongside data observability and the best practices above, businesses can achieve high data quality in ETL.

A well-designed testing framework, supported by the right tools and metadata, scales this effort, making data a trusted asset for growth.