Data is no longer just big; by present standards, it is becoming unmanageable. Conventional analytics are starting to show cracks under pressure as organizations push the boundaries of decision-making. This pressure is giving rise to quantum analytics, a fundamentally new approach that applies the principles of quantum mechanics to solve complex problems at scale. Quantum computing in big data is taking us into the realm of next-gen data analytics, where speed, depth, and precision transform decision-making in every industry.

What Makes Quantum Analytics Different from Classical Methods?

The difference between classical and quantum analytics lies in how information is processed and interpreted. Quantum analytics redefines the principles of computation in the following ways:

Classical systems process data bit by bit and step by step, along deterministic, linear pathways, which makes scalability a bottleneck. Quantum systems, on the other hand, employ superposition: a single qubit can exist in a combination of 0 and 1 at the same time, and a register of n qubits can represent a superposition over all 2^n basis states at once. This enables a kind of quantum parallelism in which many possible lines of calculation are explored together, which for certain problems can accelerate the analysis of huge datasets to previously unheard-of speeds.
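To make the state-space idea concrete, here is a minimal pure-Python sketch, an illustration rather than a real quantum SDK, that applies the standard Hadamard gate to each of n qubits and produces an equal superposition over all 2^n basis states. The helper names are hypothetical. Note that measuring this state returns just one basis state at random; quantum algorithms need interference to turn a superposition into a useful answer.

```python
import math

# Toy state-vector simulator (hypothetical helper names), not a real quantum SDK.

def apply_hadamard(state, target):
    """Apply a Hadamard gate to qubit `target` of a list of complex amplitudes."""
    h = 1 / math.sqrt(2)
    new_state = list(state)
    step = 1 << target
    for i in range(len(state)):
        if i & step == 0:
            # Pair each basis state with the one that differs only in the target bit.
            a, b = state[i], state[i | step]
            new_state[i] = h * (a + b)
            new_state[i | step] = h * (a - b)
    return new_state

def uniform_superposition(n_qubits):
    """Start in |00...0> and apply a Hadamard to every qubit."""
    state = [0j] * (2 ** n_qubits)
    state[0] = 1 + 0j
    for q in range(n_qubits):
        state = apply_hadamard(state, q)
    return state

state = uniform_superposition(3)
probabilities = [abs(a) ** 2 for a in state]
print(len(state), probabilities)
```

The amplitude list doubles with every added qubit, which is also why simulating quantum hardware classically becomes impractical beyond a few dozen qubits.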

More dramatic still is entanglement: qubits become so intertwined that measuring one immediately determines the correlated outcome of the other. This lets quantum models capture associations in complex data that classical analytics often miss.
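A minimal sketch of entanglement, again as a toy state-vector simulation with hypothetical helper names: a Hadamard followed by a CNOT prepares the Bell state (|00> + |11>)/sqrt(2), and repeated sampling shows that the two qubits' measurement results always agree, even though each individual result is random.

```python
import math
import random

# Toy simulation (hypothetical helper names); amplitude index bit 0 is qubit 0, bit 1 is qubit 1.

def bell_state():
    """Return amplitudes for (|00> + |11>)/sqrt(2), ordered by index 0..3."""
    h = 1 / math.sqrt(2)
    # Hadamard on qubit 0 of |00>: amplitude h at index 0 (q0=0, q1=0) and index 1 (q0=1, q1=0).
    state = [h, h, 0.0, 0.0]
    # CNOT (control qubit 0, target qubit 1) swaps the amplitudes at index 1 and index 3.
    state[1], state[3] = state[3], state[1]
    return state

def measure(state, rng):
    """Sample one basis state with probability |amplitude|^2 and return (qubit0, qubit1)."""
    probs = [abs(a) ** 2 for a in state]
    outcome = rng.choices(range(len(state)), weights=probs)[0]
    return outcome & 1, (outcome >> 1) & 1

rng = random.Random(42)
samples = [measure(bell_state(), rng) for _ in range(1000)]
agree = all(q0 == q1 for q0, q1 in samples)
zeros = sum(1 for q0, _ in samples if q0 == 0)
print(f"qubits always agree: {agree}; qubit 0 read 0 in {zeros}/1000 shots")
```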

Key Differentiators at a Glance:

- Parallelism, not sequential steps: Quantum systems use superposition to represent a large number of possibilities simultaneously, and entanglement to capture the connections between variables at scale.

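- Dramatic speed boosts: Some quantum algorithms offer large speedups over their best-known classical counterparts: exponential for problems such as factoring, and quadratic for searching unstructured data. Comparable gains in pattern recognition and optimization are an active area of research.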

- New analytical depths: Quantum interference improves the accuracy of results by amplifying the probability of correct answers and suppressing irrelevant ones. Together, these properties give quantum analytics the ability to find patterns and relationships at a granular level that classical models find hard to match.
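Interference and the search speedup can both be seen in Grover's algorithm, the standard quantum search routine. The sketch below simulates its amplitude amplification directly on a list of real amplitudes (hypothetical helper names; on real hardware the oracle and diffusion steps are gate sequences). About (pi/4)·sqrt(N) iterations push the probability of the marked item close to 1, compared with roughly N/2 guesses on average for a classical linear scan: a quadratic gain, not an exponential one.

```python
import math

# Toy simulation of Grover amplitude amplification (hypothetical helper names).

def grover_search(n_items, marked, iterations=None):
    """Return (probabilities, iterations used) after Grover iterations over n_items states."""
    if iterations is None:
        iterations = math.floor(math.pi / 4 * math.sqrt(n_items))
    amps = [1 / math.sqrt(n_items)] * n_items      # equal superposition over all items
    for _ in range(iterations):
        amps[marked] = -amps[marked]               # oracle: flip the phase of the marked item
        mean = sum(amps) / n_items
        amps = [2 * mean - a for a in amps]        # diffusion: reflect about the mean (interference)
    return [a * a for a in amps], iterations

probs, steps = grover_search(8, marked=5)
print(steps, round(probs[5], 4))  # prints: 2 0.9453
```

Each diffusion step is where interference happens: the marked amplitude grows while the others shrink toward zero.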

The Role of Quantum Computing in Big Data Transformation

Classical systems are being stretched to their limits as data environments grow in size and complexity. Quantum analytics offers a step forward: qubits, superposition, and entanglement can support decisions that are otherwise out of reach.

This transition is not hypothetical. For example, by exploiting superposition and entanglement through massively parallel processing paths, some analytics workloads that take days on conventional systems could run in minutes or even seconds.

- Exponential speed-ups: Certain quantum algorithms scale logarithmically with data size and dimensionality, under conditions such as efficient data loading, which opens the possibility of an exponential speed-up over classical algorithms.

- Deep insight: Where massive volumes of transactional or sensor data are involved, quantum computing in big data can uncover complex relationships that support smarter risk models, faster fraud identification, and pattern discovery in seconds.

- Efficiency gains: Preliminary benchmark results suggest up to 70% faster processing and 30% more efficient computation at scale across analytics applications.
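The logarithmic-scaling claim typically rests on amplitude encoding, where N values are stored in the amplitudes of about log2(N) qubits. This minimal sketch (hypothetical function names) computes the qubit count and the normalized amplitudes. It does not model state preparation, which on real hardware can itself require on the order of N operations for arbitrary data unless specialized data-loading hardware is available; that is one reason the speed-ups hold only under specific conditions.

```python
import math

# Illustrative amplitude-encoding arithmetic (hypothetical helper names); no hardware state preparation.

def qubits_needed(n_values):
    """Qubits required to hold n_values amplitudes: ceil(log2(n)), at least 1."""
    return max(1, math.ceil(math.log2(n_values)))

def amplitude_encode(values):
    """Zero-pad to a power of two and L2-normalize so the squared amplitudes sum to 1."""
    n_qubits = qubits_needed(len(values))
    padded = list(values) + [0.0] * (2 ** n_qubits - len(values))
    norm = math.sqrt(sum(v * v for v in padded))
    if norm == 0:
        raise ValueError("cannot encode an all-zero vector")
    return n_qubits, [v / norm for v in padded]

for n in (8, 1_000, 1_000_000, 10**9):
    print(f"{n:>13,} values -> {qubits_needed(n)} qubits")
```

A billion values fit in the amplitudes of 30 qubits, which is the intuition behind logarithmic scaling in data size.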

Collectively, these capabilities make next-gen data analytics not only faster but fundamentally different, a game changer in how organizations derive meaning from the data deluge. Integrating quantum analytics is a strategic inflection point because it can change how industries that make high-stakes decisions operate.

Industry Use Cases: Where Quantum Analytics Is Making a Mark

Quantum analytics is quietly materializing sector by sector, not with a bang but with a powerful tide of data and transformation. What follows is not speculative fiction but a glimpse of the industries already testing quantum analytics and gaining access to next-level data insights.

- Finance and Risk Management

- Major banks and investment firms are trialing quantum analytics algorithms that bring quantum computing in big data to risk modeling, portfolio optimization, and fraud detection. The reported benefits are large: risk-modeling accuracy up 35%, fraud-detection success up 45%, and portfolio optimization times down 70%.

- Large organizations such as JPMorgan Chase have already reported breakthroughs, such as generating “certified randomness” with quantum circuits, which is important in cryptography, and speedups in training predictive models.
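Portfolio selection is commonly cast for quantum hardware as a QUBO (quadratic unconstrained binary optimization) problem, the input format used by quantum annealers and QAOA-style algorithms. The sketch below builds such a QUBO for choosing exactly k assets, trading expected return against covariance risk, with a penalty term enforcing the budget. The returns, covariances, penalty weight, and function names are all hypothetical, and the brute-force solver merely stands in for a quantum optimizer, so it only works for a handful of assets.

```python
from itertools import product

# Hypothetical portfolio QUBO; brute_force_qubo stands in for a quantum annealer or QAOA solver.

def build_portfolio_qubo(mu, sigma, k, risk_aversion=1.0, penalty=1.0):
    """QUBO {(i, j): coeff}, i <= j, for risk_aversion*x^T sigma x - mu^T x + penalty*(sum(x) - k)^2.

    Uses x_i^2 == x_i for binary x and drops the constant penalty*k^2 (it does not change the minimizer).
    """
    n = len(mu)
    q = {}
    for i in range(n):
        q[(i, i)] = risk_aversion * sigma[i][i] - mu[i] + penalty * (1 - 2 * k)
        for j in range(i + 1, n):
            # Symmetric covariance and the penalty's cross term each contribute twice per pair.
            q[(i, j)] = 2 * risk_aversion * sigma[i][j] + 2 * penalty
    return q

def qubo_energy(q, x):
    return sum(c * x[i] * x[j] for (i, j), c in q.items())

def brute_force_qubo(q, n):
    """Exhaustively minimize the QUBO over all 2^n bitstrings."""
    best = min(product((0, 1), repeat=n), key=lambda x: qubo_energy(q, x))
    return best, qubo_energy(q, best)

mu = [0.10, 0.12, 0.08, 0.15]            # hypothetical expected returns
sigma = [[0.05, 0.01, 0.00, 0.02],       # hypothetical covariance matrix
         [0.01, 0.06, 0.01, 0.04],
         [0.00, 0.01, 0.03, 0.00],
         [0.02, 0.04, 0.00, 0.08]]
selection, energy = brute_force_qubo(build_portfolio_qubo(mu, sigma, k=2), len(mu))
print("selected assets:", [i for i, bit in enumerate(selection) if bit])  # prints: selected assets: [2, 3]
```

Asset 1 has the second-highest expected return, but its covariance with asset 3 pushes the optimizer toward the lower-risk pair of assets 2 and 3.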

- Transport, Logistics & Smart Infrastructure

Quantum computing with big data has already been used to optimize routes in real time in live pilot deployments. A particularly impressive pilot in Lisbon used live traffic data to reroute buses more efficiently, with quantum processors making the routing decisions in real time.

Supply chains are also being remodeled: new generations of data analytics models powered by quantum techniques are cutting transportation costs, increasing forecasting accuracy, and minimizing downtime. Reported savings range from 30% to 45%, depending on the application.

- Healthcare & Genomics

At recent quantum conferences, researchers demonstrated how quantum analytics can speed up the modeling of drug interactions and improve the design of clinical trials. Early prototypes are already using quantum sensors to make imaging safer.

- Semiconductors and Material Design

A new quantum machine learning method developed in semiconductor research, the Quantum Kernel-Aligned Regressor (QKAR), improved modeling efficiency by 20% when predicting vital chip design features from small data samples.
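The article does not describe QKAR's internals, so the following is not QKAR. It is a minimal sketch of the general idea behind quantum-kernel regression: encode each feature as a single-qubit rotation, use the squared overlap of the resulting states (a fidelity kernel) as the similarity measure, and fit kernel ridge regression on a small dataset. The angle-encoding feature map, the ridge value, the toy data, and all names are assumptions. For this simple product-state feature map the kernel has a closed form and can be computed classically; quantum hardware matters only for entangling feature maps that are hard to simulate.

```python
import math

# Generic fidelity-kernel ridge regression sketch (hypothetical names); NOT the QKAR method itself.

def fidelity_kernel(x, y):
    """|<phi(x)|phi(y)>|^2 where each feature v is encoded as RY(v)|0> = cos(v/2)|0> + sin(v/2)|1>."""
    k = 1.0
    for xi, yi in zip(x, y):
        k *= math.cos((xi - yi) / 2) ** 2   # per-qubit overlap is cos((xi - yi)/2), then squared
    return k

def solve(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting (small dense systems)."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x

def fit_kernel_ridge(xs, ys, ridge=1e-3):
    """Return a predictor f(x) = sum_i alpha_i * k(x, x_i) with alpha = (K + ridge*I)^-1 y."""
    k = [[fidelity_kernel(a, b) + (ridge if i == j else 0.0) for j, b in enumerate(xs)]
         for i, a in enumerate(xs)]
    alphas = solve(k, ys)
    return lambda x: sum(al * fidelity_kernel(x, xi) for al, xi in zip(alphas, xs))

xs = [[0.0], [1.0], [2.0]]       # hypothetical one-feature training inputs
ys = [0.0, 1.0, 0.5]             # hypothetical targets
model = fit_kernel_ridge(xs, ys)
print([round(model(x), 3) for x in xs], round(model([1.5]), 3))
```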

Data Privacy, Encryption, and Quantum Security

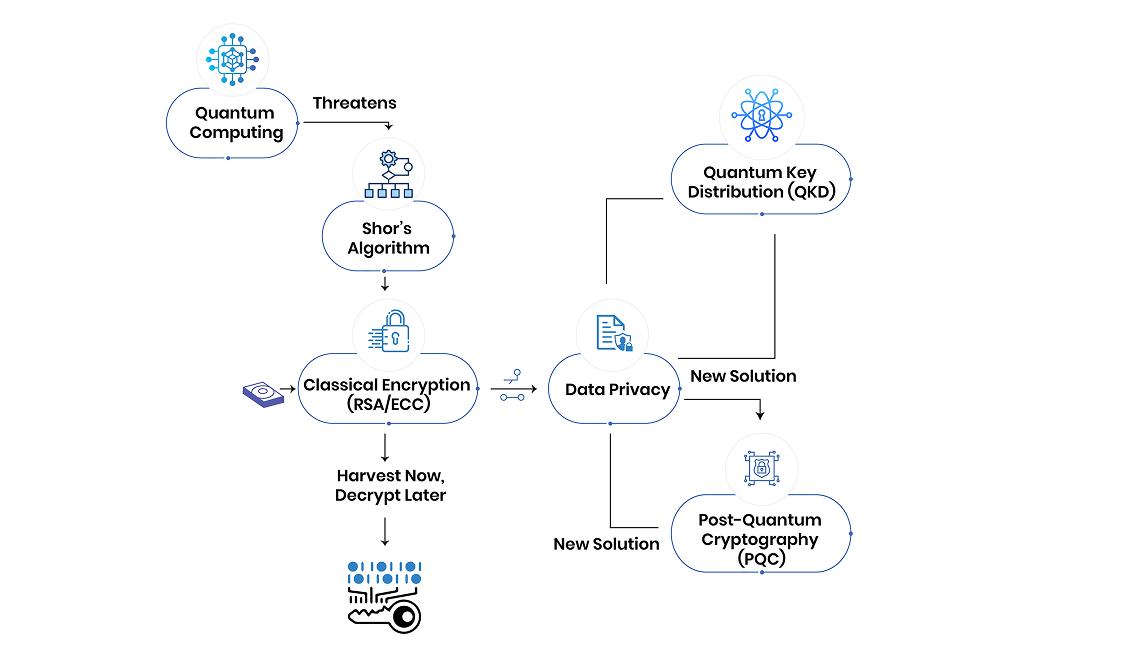

Quantum computing is not merely an accelerator for analytics; it also disrupts the mathematics behind current encryption. Protocols based on RSA and ECC are especially vulnerable: if a scalable quantum computer arrives, Shor’s algorithm could break them rapidly, potentially in minutes or hours. Meanwhile, “harvest now, decrypt later”, the practice of adversaries collecting encrypted data today in order to decrypt it once quantum capability exists, is already a recognized threat.
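Shor’s algorithm reduces factoring to finding the order r of a number a modulo N; only that order-finding step requires a quantum computer. The sketch below (hypothetical function names) brute-forces the order classically, which takes exponential time at cryptographic key sizes, and then applies the same classical post-processing Shor’s algorithm uses to turn r into factors. It is meant only to show why a fast quantum order-finder would break RSA.

```python
import math

# Classical stand-in for Shor's algorithm (hypothetical names); find_order is the quantum step.

def find_order(a, n):
    """Smallest r > 0 with a**r % n == 1 (requires gcd(a, n) == 1). Brute force here."""
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r

def shor_classical_sketch(n, a):
    """Return a factor pair of n, or None if this choice of a fails and another should be tried."""
    g = math.gcd(a, n)
    if g != 1:
        return g, n // g              # lucky guess: a already shares a factor with n
    r = find_order(a, n)
    if r % 2 == 1:
        return None                   # odd order: retry with a different a
    x = pow(a, r // 2, n)
    if x == n - 1:
        return None                   # a^(r/2) = -1 mod n: retry with a different a
    p = math.gcd(x - 1, n)
    return p, n // p

print(shor_classical_sketch(15, 7))   # prints: (3, 5)
print(shor_classical_sketch(21, 2))   # prints: (7, 3)
```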

Core Adaptations for Secure Analytics

- Quantum key distribution (QKD) brings a new standard: any attempt to eavesdrop disturbs the quantum states involved in the key exchange, so the communicating parties can detect it.

- Post-quantum cryptography (PQC) introduces new public-key algorithms, designed to resist quantum attacks, that are being standardized by organizations such as NIST.
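QKD’s eavesdropper detection can be illustrated with a toy simulation of BB84, the best-known QKD protocol (the article does not name a protocol, so this choice is an assumption). Bits are encoded in one of two randomly chosen bases, the parties keep only positions where their bases match, and an intercept-resend eavesdropper who must guess the basis introduces roughly a 25% error rate into that sifted key, which shows up when the parties compare part of it. Photons are modeled classically here, and all names are hypothetical.

```python
import random

# Toy BB84 simulation (hypothetical names); quantum measurement is modeled with coin flips.

def bb84(n_bits, eavesdrop=False, seed=0):
    """Return (sifted key, quantum bit error rate) for one simulated BB84 run."""
    rng = random.Random(seed)
    alice_bits = [rng.randint(0, 1) for _ in range(n_bits)]
    alice_bases = [rng.randint(0, 1) for _ in range(n_bits)]   # 0 = rectilinear, 1 = diagonal
    bob_bases = [rng.randint(0, 1) for _ in range(n_bits)]

    bob_bits = []
    for bit, a_basis, b_basis in zip(alice_bits, alice_bases, bob_bases):
        photon_bit, photon_basis = bit, a_basis
        if eavesdrop:
            e_basis = rng.randint(0, 1)
            # Measuring in the wrong basis gives a random bit; Eve resends in her own basis.
            if e_basis != photon_basis:
                photon_bit = rng.randint(0, 1)
            photon_basis = e_basis
        # Bob reads the bit correctly in the matching basis, otherwise gets a coin flip.
        bob_bits.append(photon_bit if b_basis == photon_basis else rng.randint(0, 1))

    # Sifting: bases are announced publicly and only matching positions are kept.
    sifted = [(a, b) for a, b, ab, bb in zip(alice_bits, bob_bits, alice_bases, bob_bases) if ab == bb]
    # In practice only a sample is compared and discarded; the whole sifted key is used here for simplicity.
    errors = sum(a != b for a, b in sifted)
    qber = errors / len(sifted) if sifted else 0.0
    return [a for a, _ in sifted], qber

key, qber = bb84(2000)
_, qber_eve = bb84(2000, eavesdrop=True)
print(len(key), qber, round(qber_eve, 3))
```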

A recent survey by the Information Systems Audit and Control Association (ISACA) found that 62% of security experts are concerned about the impact of quantum computing on internet encryption, yet most companies have no clear strategy for addressing it.

Skill Shifts and Tools: What the Analytics Workforce Needs Next

The quantum age will not simply require adding more of the same skills to the workforce. Instead, quantum analytics will transform how data professionals approach analysis. The shift starts with combining established strengths with new areas of expertise:

Analytical teams are now expected to understand quantum computing in big data, but this does not always require a physics PhD. Fewer than half of all quantum jobs will demand a degree, and in many positions, flexibility and curiosity matter more than hyper-specialization.

Emerging essentials include:

- Quantum algorithm fluency: Understanding the paradigm of qubit-based processing and how it reframes problems such as optimization and correlation mining.

- Foundational quantum theory: A practical understanding of superposition, entanglement, and qubit behavior helps analysts model business problems in a quantum-ready form.

- Interdisciplinary collaboration: Teams that combine computer science, math, and physics expertise, and people who can break down silos between these fields, are in high demand.

Why it matters: As next-gen data analytics harness quantum speed and scale, these hybrid talents will be the foundation of organizational preparedness. Adaptability is what allows teams to move from familiar tools to genuinely disruptive insights, giving analytics teams the ability not only to deliver results but to reinvent how they are produced.

Conclusion

Quantum analytics represents more than just an upgrade in big data; it’s a paradigm shift. With computation that, for certain problems, could be hundreds of times faster than on classical systems, it puts once-unsolvable problems within reach. Beyond speed, it reshapes security frameworks, redefines workforce skills, and enables truly scalable systems. For industries seeking faster and smarter decision-making, quantum analytics marks the beginning of a new era.