Docker has transformed how data engineers work, making it easier to build, test, and deploy data pipelines. Instead of struggling with "it works on my machine" issues, Docker lets you package your code, tools, and dependencies into lightweight containers that run the same way on your laptop, a server, or the cloud. For data engineers, that means reproducible environments and smoother collaboration.

In this article, we’ll explore 10 essential Docker commands every data engineer needs to know. These commands will help you manage containers, debug pipelines, and optimize resources, making your data engineering workflows faster and more reliable. Whether you’re new to Docker or looking to level up, let’s dive in!

What is Docker and Why Do Data Engineers Need It?

Docker is a tool that packages applications and their dependencies—like Python, Spark, or database connectors—into containers. These containers are portable, lightweight, and run consistently across different environments. For data engineers, Docker solves common problems like:

- Environment Inconsistencies: No more mismatched software versions between development and production.

- Dependency Conflicts: Containers isolate tools, preventing version clashes.

- Slow Setups: Pre-built images save hours of manual configuration.

- Collaboration Challenges: Share containers to ensure everyone uses the same pipeline setup.

Before we jump into the commands, let’s cover some key Docker terms:

- Docker Image: A snapshot of an environment with all dependencies.

- Docker Container: A running instance of an image.

- Dockerfile: A script to build custom images.

- Docker Hub: A registry for sharing and downloading images.

To get started, install Docker Desktop from Docker’s official website and verify it with:

docker --version

You can also use Visual Studio Code with the Docker extension for easier management.

Why Docker is a Must for Data Engineers

Docker offers four main benefits for data engineering:

1. Reproducibility

Docker ensures your data pipeline works the same in development, testing, and production. Imagine building a pipeline with Python, Spark, and specific libraries. Without Docker, setting up the same environment on another machine can lead to errors due to version mismatches. Docker packages everything—code, tools, and configurations—into a container. You can run this container anywhere, and it behaves the same.

For example, a container with Airflow and PostgreSQL runs identically on your laptop or a cloud server. This eliminates the “it works on my machine” problem, saving hours of troubleshooting. Data engineers can share containers with teammates, ensuring everyone uses the exact setup. Reproducibility makes pipelines reliable and speeds up deployment, letting you focus on building great data solutions.

2. Isolation

Docker keeps tools and libraries separate, avoiding conflicts that can break pipelines. Data engineers often work with multiple tools, like Python 3.8 for one project and Python 3.9 for another. Without Docker, installing both on the same machine can cause issues, as libraries might clash. Docker’s containers isolate each project’s dependencies.

For instance, you can run a container with Python 3.8 and pandas 1.5 for an ETL job and another with Python 3.9 and pandas 2.0 for analytics, without interference. This isolation is crucial when using complex tools like Spark or TensorFlow, which have specific version needs. By keeping environments separate, Docker prevents errors and makes testing easier. You can experiment with new tools in a container without risking your main setup, giving data engineers confidence to innovate without breaking existing pipelines.

3. Scalability

Docker makes it simple to scale data pipelines, especially when paired with tools like Kubernetes. Data engineers often deal with growing datasets or sudden workload spikes. Docker containers are lightweight, so you can quickly spin up more containers to handle extra tasks.

For example, if your Spark pipeline needs more processing power, you can add containers to distribute the work. Kubernetes automates this, managing containers to balance loads and ensure efficiency.

Unlike traditional setups, where scaling might mean hours of server configuration, Docker lets you scale in minutes. Containers also use fewer resources than virtual machines, saving costs. Whether you’re processing massive CSV files or running real-time analytics, Docker’s scalability helps data engineers keep pipelines fast and responsive, even as demands grow, without complicated manual setups.

4. Collaboration

Docker simplifies teamwork by letting data engineers share consistent environments. Without Docker, sharing a pipeline means sending long setup instructions, which can lead to errors if someone misses a step. With Docker, you package your pipeline—code, libraries, and configurations—into a container and share it via Docker Hub. Teammates can run the container with a single command, getting the exact setup instantly.

For example, if you build a pipeline with Airflow and PostgreSQL, your team can use the same container to test or deploy it, avoiding version mismatches. This makes collaboration faster and reduces delays. Data engineers can also share images with clients or other teams, ensuring everyone works on the same foundation. Docker’s collaboration power helps teams stay aligned, deliver projects on time, and focus on solving data challenges together.

Compared to virtual machines, Docker is lighter because containers share the host’s kernel instead of booting their own. For example, running Spark on a VM requires a full guest OS, while a Docker image only packages Spark, its dependencies, and a minimal set of system libraries.

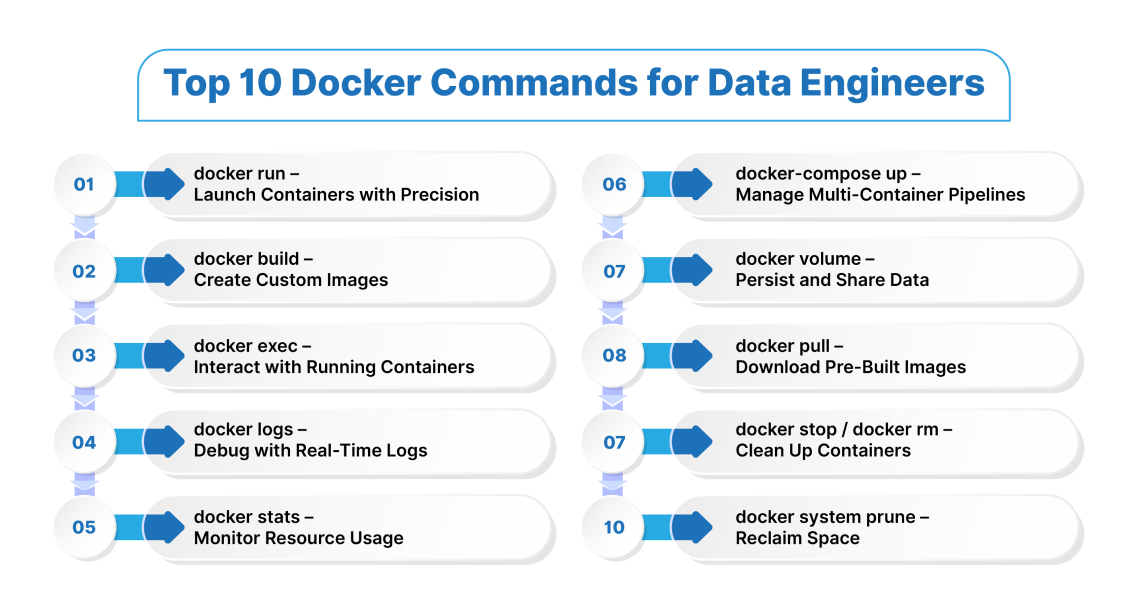

Top 10 Essential Docker Commands

Now, let’s explore the essential Docker commands for data engineering!

1. docker run – Launch Containers with Precision

The docker run command creates and starts a container from an image. It’s the starting point for most data engineering tasks, like launching a database or running a data pipeline.

Example:

docker run -d --name postgres -e POSTGRES_PASSWORD=secret -v pgdata:/var/lib/postgresql/data -p 5432:5432 postgres:15

Why It’s Important for Data Engineers:

- Launches tools like PostgreSQL, Spark, or Jupyter for data processing.

- Flags like -d (detached mode), --name (custom name), -e (environment variables), -p (port mapping), and -v (volumes) give you control.

- Persists data with volumes, preventing loss when containers are removed.

Pro Tip: Always use volumes (-v) for databases, like pgdata:/var/lib/postgresql/data. Without one, the data lives in the container’s writable layer: it survives docker stop, but it is gone as soon as the container is removed with docker rm.
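If you launch the same container from scripts or tests, it can help to assemble these flags programmatically. The sketch below is one possible way to do that in Python. Note that build_run_command is a hypothetical helper written for this article, not part of Docker or any library, and it only builds the argument list without running anything.

```python
import shlex


def build_run_command(image, name, env=None, volumes=None, ports=None, detach=True):
    """Assemble a `docker run` argument list (hypothetical helper; runs nothing)."""
    cmd = ["docker", "run"]
    if detach:
        cmd.append("-d")                      # -d: run in the background
    cmd += ["--name", name]                   # --name: stable, readable name
    for key, value in (env or {}).items():
        cmd += ["-e", f"{key}={value}"]       # -e: environment variable
    for source, target in (volumes or {}).items():
        cmd += ["-v", f"{source}:{target}"]   # -v: named volume or host path
    for host_port, container_port in (ports or {}).items():
        cmd += ["-p", f"{host_port}:{container_port}"]  # -p: host:container
    cmd.append(image)
    return cmd


# Rebuild the PostgreSQL example from this section.
postgres_cmd = build_run_command(
    image="postgres:15",
    name="postgres",
    env={"POSTGRES_PASSWORD": "secret"},
    volumes={"pgdata": "/var/lib/postgresql/data"},
    ports={5432: 5432},
)
print(shlex.join(postgres_cmd))
```

To actually start the container, the list can be handed to subprocess.run(postgres_cmd, check=True). Keeping the command as a list rather than a string avoids shell-quoting surprises.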

2. docker build – Create Custom Images

The docker build command turns a Dockerfile into a reusable image, letting you customize environments for your pipelines.

Example:

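# Dockerfile

FROM python:3.9-slim

RUN pip install pandas numpy apache-airflow

Then build the image from the directory that contains the Dockerfile (the trailing dot is the build context):

docker build -t custom_airflow:latest .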

Why It’s Important for Data Engineers:

- Builds images with tools like Airflow or PySpark, ensuring consistency.

- Eliminates manual setup for complex dependencies.

- Speeds up team onboarding—share the image, and everyone’s ready.

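Pro Tip: Use multi-stage builds to keep images small. Install packages in a builder stage, then copy only the installed files into a slim final image:

FROM python:3.9 AS builder
RUN pip install --prefix=/install pandas

FROM python:3.9-slim
WORKDIR /app
COPY --from=builder /install /usr/local
COPY . .
CMD ["python", "script.py"]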

3. docker exec – Interact with Running Containers

The docker exec command runs commands inside a container, perfect for debugging or testing.

Example:

docker exec -it postgres psql -U postgres

Why It’s Important for Data Engineers:

- Run SQL queries, check configs, or test scripts without restarting containers.

- The -it flags enable interactive terminal access.

- Safer than docker attach, which connects you to the container’s main process, where a stray Ctrl+C can stop the container.

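Pro Tip: To open a shell inside a container, use docker exec -it my-container bash. If the image doesn’t include bash (many Alpine-based images don’t), fall back to sh: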

docker exec -it my-container sh
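The same command is handy in automated checks, where no terminal is attached. Here is a minimal sketch, assuming a container named postgres like the one started earlier. Both psql_command and run_query are hypothetical helper names; they simply wrap docker exec with Python's standard subprocess module.

```python
import subprocess


def psql_command(container, sql, user="postgres"):
    """Build a non-interactive `docker exec ... psql` call (hypothetical helper)."""
    # No -it here: scripts have no terminal. psql's -c flag runs one statement.
    return ["docker", "exec", container, "psql", "-U", user, "-c", sql]


def run_query(container, sql, user="postgres"):
    """Run the query and return psql's text output; raises if the command fails."""
    result = subprocess.run(
        psql_command(container, sql, user),
        capture_output=True,
        text=True,
        check=True,
    )
    return result.stdout


# Show the command that would be executed, without touching Docker.
print(psql_command("postgres", "SELECT count(*) FROM pg_tables;"))
```

Calling run_query("postgres", "SELECT 1;") would execute the statement inside the running container and return whatever psql prints.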

4. docker logs – Debug with Real-Time Logs

The docker logs command shows a container’s logs, helping you troubleshoot pipeline failures.

Example:

docker logs --tail 100 -f airflow_scheduler

Why It’s Important for Data Engineers:

- Streams logs in real-time (-f) to catch errors in Airflow DAGs or Spark jobs.

- Limits output with --tail for faster debugging.

- Works only if your apps log to stdout/stderr, since that is what docker logs captures.

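Pro Tip: For Python apps, configure logging to stdout:

import logging
import sys

# basicConfig writes to stderr by default; stream=sys.stdout sends records to stdout
logging.basicConfig(level=logging.INFO, stream=sys.stdout)

logging.info("Pipeline running!")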

5. docker stats – Monitor Resource Usage

The docker stats command displays live CPU, memory, and network usage for containers.

Example:

docker stats postgres spark_master

Why It’s Important for Data Engineers:

- Identifies resource-hogging containers, like a Spark job eating CPU.

- Helps optimize pipelines for better performance.

- Monitors multiple containers at once.

Pro Tip: Filter for specific containers:

docker stats $(docker ps --filter "name=myapp" -q)
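For automated checks, docker stats can print one JSON object per container when run with --no-stream --format "{{json .}}". The sketch below shows one way to flag busy containers from that output. The helper names and the 80% threshold are assumptions chosen for illustration, and the sample rows are made-up values in the shape of that output, not real measurements.

```python
import json


def parse_percent(text):
    """Convert a string such as '12.50%' to the float 12.5."""
    return float(text.strip().rstrip("%"))


def busy_containers(stats_output, cpu_limit=80.0):
    """Return names of containers whose CPU percentage exceeds cpu_limit."""
    busy = []
    for line in stats_output.splitlines():
        if not line.strip():
            continue  # skip blank lines
        row = json.loads(line)
        if parse_percent(row["CPUPerc"]) > cpu_limit:
            busy.append(row["Name"])
    return busy


# Illustrative input only: invented numbers, one JSON object per line.
sample = "\n".join([
    '{"Name": "postgres", "CPUPerc": "3.10%", "MemPerc": "1.20%"}',
    '{"Name": "spark_master", "CPUPerc": "187.40%", "MemPerc": "35.00%"}',
])
print(busy_containers(sample))
```

CPU values above 100% are normal on multi-core hosts, so the threshold is worth tuning to the number of cores your containers can use.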

6. docker-compose up – Manage Multi-Container Pipelines

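The docker-compose up command starts multiple containers defined in a docker-compose.yml file. With the Compose plugin that ships in current Docker Desktop releases, the same command is written docker compose up.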

Example:

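# docker-compose.yml

# Skeleton only: a real Airflow service also needs a command (e.g. webserver)
# and a metadata database connection
services:
  airflow:
    image: apache/airflow:2.6.0
    ports:
      - "8080:8080"
  postgres:
    image: postgres:14
    environment:
      POSTGRES_PASSWORD: secret
    volumes:
      - pgdata:/var/lib/postgresql/data

# Named volumes must be declared at the top level
volumes:
  pgdata:

Then start everything in the background:

docker-compose up -d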

Why It’s Important for Data Engineers:

- Simplifies complex pipelines with tools like Airflow, PostgreSQL, and Redis.

- Automates setup with one command.

- Cleans up with docker-compose down --volumes to remove containers and data.

Pro Tip: View logs for all services:

docker-compose logs -f
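After docker-compose up -d returns, the containers are started, but the services inside them may still be initializing. A pipeline script can poll a published port before it continues. The sketch below is a coarse readiness check built on Python's standard library; wait_for_port is a hypothetical helper, and an open port only shows that something is listening, not that the service is fully ready.

```python
import socket
import time


def wait_for_port(host, port, timeout=30.0, interval=0.5):
    """Return True once a TCP connection to host:port succeeds, False on timeout."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            # create_connection raises OSError while nothing is listening yet.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            time.sleep(interval)
    return False
```

For the compose file above, wait_for_port("localhost", 8080) would wait for the port published by the airflow service, assuming that service is configured to serve its web UI there.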

7. docker volume – Persist and Share Data

The docker volume command manages storage for containers, ensuring data persists.

Example:

docker volume create etl_data

docker run -v etl_data:/data -d my_etl_tool

Why It’s Important for Data Engineers:

- Saves critical data like CSVs or database tables when containers are removed or recreated.

- Shares data between containers, like Spark and Hadoop.

- Prevents data loss in production pipelines.

Pro Tip: List volumes to check storage:

docker volume ls
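From the application's point of view, a volume is just a directory. The following sketch shows how an ETL script inside the container might write its output under /data, the mount path used in the example above. The write_rows helper and the ETL_DATA_DIR environment variable are hypothetical names introduced here for illustration.

```python
import csv
import os
from pathlib import Path


def write_rows(rows, filename, data_dir=None):
    """Write rows to a CSV file under the volume mount and return its path."""
    # ETL_DATA_DIR is a hypothetical override; /data matches -v etl_data:/data.
    base = Path(data_dir or os.environ.get("ETL_DATA_DIR", "/data"))
    base.mkdir(parents=True, exist_ok=True)
    target = base / filename
    with target.open("w", newline="") as handle:
        csv.writer(handle).writerows(rows)
    return target
```

Anything written this way lands in the etl_data volume, so a later container started with the same -v flag sees the file. Making the directory configurable also lets you run the script outside Docker during development.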

8. docker pull – Download Pre-Built Images

The docker pull command fetches images from Docker Hub or other registries.

Example:

docker pull apache/spark:3.4.1

Why It’s Important for Data Engineers:

- Saves time with pre-built images for Spark, Kafka, or Jupyter.

- Refreshes your local copy: pulling a tag again fetches the newest image published under it.

- Supports specific versions with tags (e.g., :3.4.1).

Pro Tip: Verify image details:

docker inspect apache/spark:3.4.1
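Pinning versions matters for reproducibility, so some teams check image references before they pull. The sketch below is one simple way to detect references that are missing a tag or use latest. It is a simplified parser written for this article, with hypothetical helper names, and it does not cover every form an image reference can take.

```python
def split_image_reference(reference):
    """Split 'repo[:tag]' into (repository, tag); tag is None when absent."""
    name, _, _digest = reference.partition("@")  # drop an optional digest
    last_slash = name.rfind("/")
    last_colon = name.rfind(":")
    # A colon before the last slash belongs to a registry port, not a tag.
    if last_colon > last_slash:
        return name[:last_colon], name[last_colon + 1:]
    return name, None


def is_pinned(reference):
    """True when the reference has a digest or an explicit tag other than 'latest'."""
    if "@" in reference:
        return True
    _, tag = split_image_reference(reference)
    return tag is not None and tag != "latest"


for image in ["apache/spark:3.4.1", "postgres", "postgres:latest"]:
    print(image, is_pinned(image))
```

A check like this fits naturally into a CI step that scans Dockerfiles and compose files before a build.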

9. docker stop / docker rm – Clean Up Containers

The docker stop and docker rm commands stop and remove containers.

Example:

docker stop airflow_worker

docker rm airflow_worker

Why It’s Important for Data Engineers:

- Frees resources during pipeline testing.

- Keeps environments clean by removing unused containers.

- docker stop allows graceful shutdown: it sends SIGTERM, then SIGKILL if the container has not exited after a grace period (10 seconds by default).

Pro Tip: Stop all running containers:

docker stop $(docker ps -q)

10. docker system prune – Reclaim Space

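The docker system prune command removes stopped containers, unused networks, dangling images, and unused build cache. Other unused images and volumes are removed only when you add the flags shown in the example.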

Example:

docker system prune -a --volumes

Why It’s Important for Data Engineers:

- Clears disk space cluttered with old images and containers.

- Improves performance after weeks of testing.

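- The -a flag removes all images not used by any container, and --volumes also deletes unused volumes (only anonymous ones in recent Docker releases), so confirm that no data you need lives in them first.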

Pro Tip: Remove only stopped containers:

docker container prune

Getting Started with Docker for Data Engineering

Ready to use Docker for data engineering? Follow these steps:

- Install Docker Desktop: Download from Docker’s website and check with docker --version.

- Explore Images: List the images already on your machine with docker images.

- Pull an Image: Try docker pull python:3.8 for data processing.

- Run a Container: Start an interactive shell with docker run -it python:3.8 bash.

- Install Tools: Use pip inside the container to add libraries like pandas.

- Save Changes: Commit changes with docker commit my-container my-image:tag, where my-container is the container’s name or ID.

- Build a Dockerfile: Create a custom image for your pipeline.

- Share Images: Upload to Docker Hub for team collaboration.

Real-World Example: Building a Data Pipeline

Imagine you’re a data engineer building an ETL pipeline with Airflow and PostgreSQL. Here’s how you’d use these commands:

- Pull images: docker pull apache/airflow:2.6.0 and docker pull postgres:14.

- Create a docker-compose.yml to define services.

- Start with docker-compose up -d.

- Check resources with docker stats.

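- Debug Airflow with docker logs -f airflow_scheduler (use the container name shown by docker ps, since Compose generates names from the project and service).

- Run queries in PostgreSQL with docker exec -it postgres psql -U postgres, again substituting the real container name.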

- Clean up with docker-compose down --volumes and docker system prune -a.

This setup ensures your pipeline runs consistently, scales easily, and avoids setup headaches.

Final Thoughts

Mastering these essential Docker commands helps data engineers build robust, reproducible data pipelines. From launching containers with docker run to cleaning up with docker system prune, they streamline workflows, save time, and make collaboration easier. Whether you’re wrangling massive datasets or deploying ETL processes, these commands are your toolkit for success.

Ready to dive deeper? Download Docker Desktop and start experimenting today!