The digital world generates trillions of data bytes every second. Your smartwatch, social media feeds, and online shopping patterns contribute to this massive information stream. However, raw numbers mean nothing without professionals who can transform them into strategic insights.

Early forecasts predicted 11.5 million global data jobs by 2026, and with the BLS projecting 34% US growth through 2034, opportunities continue to expand worldwide. This growth reflects a shift: businesses aren't just collecting information anymore. They're building entire strategies around it.

The question isn't whether you should develop data science skills. It's which ones will future-proof your career.

Why Are Data Science Careers in Such High Demand Right Now?

Three powerful forces are reshaping the job market.

First, the U.S. data analytics market is projected to generate 43.52 billion USD in revenue by 2030, reflecting a robust compound annual growth rate (CAGR) of 20.7% from 2025 to 2030. Second, machine learning job demand is expected to grow by 23% over the decade spanning 2022 to 2032. Third, 82% of leaders expect all employees to have basic data literacy, according to Tableau research.

Organizations desperately need professionals who can bridge the gap between raw information and business decisions. According to LinkedIn’s Emerging Job Reports, there is a shortage of over 150,000 data science professionals in the United States alone.

But basic knowledge of Python and simple modeling is no longer enough in this field. The bar has risen dramatically.

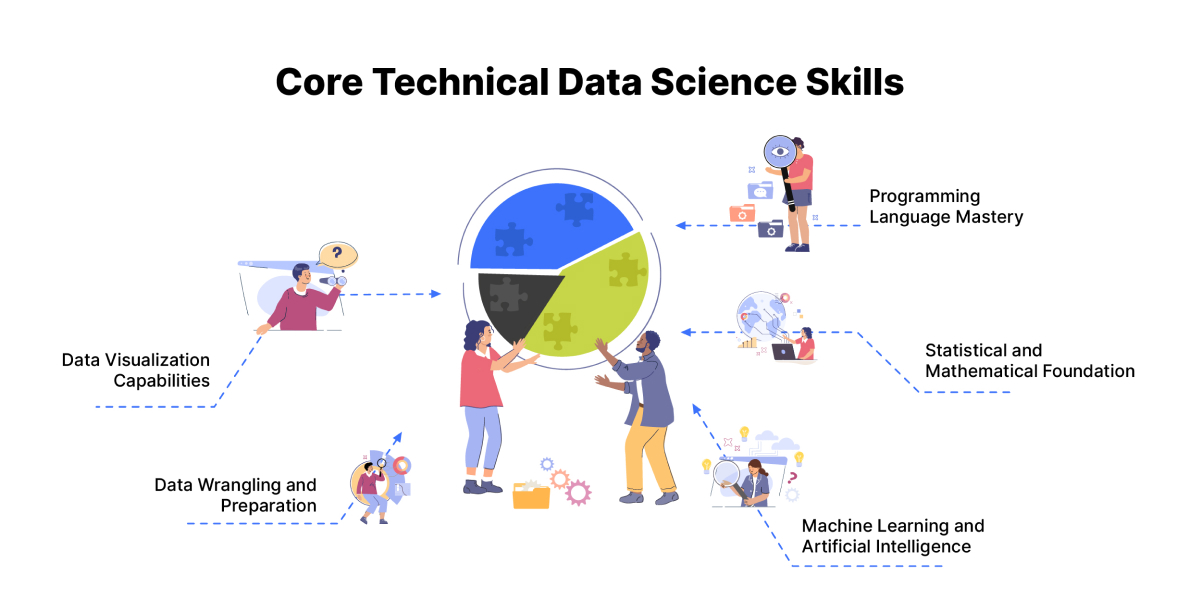

Core Technical Skills That Matter Most

These are the foundational, non-negotiable technical abilities every serious data professional must possess in 2026. They form the bedrock that everything else builds on.

Programming Language Mastery

Programming proficiency forms the foundation of data science practice. Python has been the most popular programming language for the past several years, with over 15.7 million developers using it worldwide. The language's extensive libraries, including Pandas, NumPy, Scikit-Learn, and TensorFlow, provide comprehensive tools for data manipulation, statistical analysis, and machine learning implementation.

The R programming language maintains particular strength in statistical analysis and data visualization. Its specialized packages, such as ggplot2 and dplyr, enable sophisticated analytical workflows and detailed visual representations. SQL remains indispensable for database management, enabling efficient data retrieval, querying, and manipulation across relational database systems.

These programming languages serve distinct purposes within the data science workflow. Professionals must develop fluency across multiple languages to address diverse analytical requirements.
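To make the division of labor concrete, here is a minimal sketch of SQL and Python working together. It uses Python's built-in sqlite3 module with an in-memory database. The `sales` table, its columns, and the sample rows are hypothetical, invented purely for illustration.

```python
import sqlite3

# In-memory database; the table and rows below are made up for illustration.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO sales (region, amount) VALUES (?, ?)",
    [("north", 120.0), ("south", 80.0), ("north", 60.0), ("south", 40.0)],
)

# SQL handles retrieval and aggregation inside the database.
rows = conn.execute(
    "SELECT region, SUM(amount) AS total "
    "FROM sales GROUP BY region ORDER BY region"
).fetchall()

# Python takes over for downstream analysis of the query result.
totals = dict(rows)
share_north = totals["north"] / sum(totals.values())
print(totals, round(share_north, 2))

conn.close()
```

In a real project the same query would more likely run against a production database, with the result handed to Pandas or R for further analysis.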

Statistical and Mathematical Foundation

Advanced statistical and mathematical knowledge underpins all sophisticated data analysis. Probability theory enables professionals to understand distributions, make predictions, and quantify uncertainty. Statistical inference techniques including hypothesis testing and confidence intervals allow for rigorous validation of analytical findings.
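As a rough illustration of a confidence interval and a hypothesis test, the sketch below uses only Python's standard library. The sample values and the null-hypothesis mean are hypothetical. It also assumes a normal approximation for simplicity; with a sample this small, a t-distribution (for example via SciPy) would usually be the more appropriate choice.

```python
from math import sqrt
from statistics import NormalDist, mean, stdev

# Hypothetical measurements and a hypothetical null-hypothesis mean.
sample = [5.1, 4.9, 5.3, 5.0, 5.2, 4.8, 5.4, 5.1, 5.0, 5.2]
null_mean = 5.0

n = len(sample)
sample_mean = mean(sample)
standard_error = stdev(sample) / sqrt(n)

# 95% confidence interval for the mean (normal approximation).
z_crit = NormalDist().inv_cdf(0.975)
ci_low = sample_mean - z_crit * standard_error
ci_high = sample_mean + z_crit * standard_error

# Two-sided z-test of H0: the population mean equals null_mean.
z_stat = (sample_mean - null_mean) / standard_error
p_value = 2 * (1 - NormalDist().cdf(abs(z_stat)))

print(f"mean={sample_mean:.2f}, 95% CI=({ci_low:.3f}, {ci_high:.3f})")
print(f"z={z_stat:.3f}, p={p_value:.3f}")
```

Here the interval contains the null value and the p-value is above 0.05, so this made-up sample does not provide strong evidence against the null hypothesis.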

Linear algebra provides the mathematical framework for machine learning algorithms, particularly those involving high-dimensional data and matrix operations. Calculus enables optimization of model parameters and understanding of gradient-based learning algorithms. Discrete mathematics supports algorithm development and computational thinking.
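The role of calculus in optimization can be sketched with a few lines of gradient descent. The example below fits a single parameter `w` in the model y = w * x by repeatedly stepping against the derivative of the mean squared error. The data points, learning rate, and step count are assumptions chosen for illustration, not recommended settings.

```python
# Hypothetical data generated from y = 2 * x.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]

def mse_gradient(w):
    """Derivative of mean((w * x - y) ** 2) with respect to w."""
    return sum(2 * x * (w * x - y) for x, y in zip(xs, ys)) / len(xs)

w = 0.0               # initial guess
learning_rate = 0.01  # assumed step size
for _ in range(500):
    # Move against the gradient to reduce the loss.
    w -= learning_rate * mse_gradient(w)

print(round(w, 4))
```

Frameworks such as TensorFlow and PyTorch automate the derivative computation, but the update rule they apply follows the same idea.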

Without solid grounding in these mathematical and statistical concepts, professionals lack the ability to properly evaluate model validity, interpret results accurately, or recognize when analytical approaches may be inappropriate for specific problems.

Machine Learning and Artificial Intelligence

Machine learning has transitioned from a specialized research area to a core business capability. Professionals must understand supervised learning techniques, including classification and regression algorithms. Unsupervised learning methods such as clustering and dimensionality reduction address situations where labeled data is unavailable or impractical to obtain.
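The difference between the two families can be sketched in plain Python. Below, a 1-nearest-neighbour classifier (supervised) uses labels, while a basic k-means loop (unsupervised) groups the same points without them. The points, labels, and starting centroids are hypothetical, and a production project would normally rely on a library such as Scikit-Learn instead.

```python
from math import dist

# Hypothetical 2-D points; labels are used only by the supervised example.
points = [(1.0, 1.0), (1.2, 0.8), (0.9, 1.1), (5.0, 5.0), (5.2, 4.9), (4.8, 5.1)]
labels = ["low", "low", "low", "high", "high", "high"]

def predict_1nn(query):
    """Supervised: return the label of the closest labeled point."""
    nearest = min(range(len(points)), key=lambda i: dist(points[i], query))
    return labels[nearest]

def kmeans(data, centroids, iterations=10):
    """Unsupervised: alternate point assignment and centroid updates."""
    for _ in range(iterations):
        clusters = [[] for _ in centroids]
        for p in data:
            idx = min(range(len(centroids)), key=lambda i: dist(p, centroids[i]))
            clusters[idx].append(p)
        # Recompute each centroid as the mean of its cluster (keep it if empty).
        centroids = [
            tuple(sum(c) / len(cluster) for c in zip(*cluster)) if cluster else centroid
            for cluster, centroid in zip(clusters, centroids)
        ]
    return centroids, clusters

print(predict_1nn((1.1, 0.9)))
centroids, clusters = kmeans(points, [points[0], points[-1]])
print(centroids)
```

The classifier needs the `labels` list to work at all, whereas k-means recovers the two groups from the coordinates alone.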

Deep learning represents a specialized subdomain focused on neural networks with multiple layers. Frameworks such as TensorFlow and PyTorch provide accessible implementations of complex neural network architectures.

Computational techniques continue to advance. For example, a research paper published in 2025 reported that quantum algorithms reached 95% accuracy in a pattern recognition experiment, compared with 92% for the classical algorithm. Results like this require professionals to stay current with methodological innovations.

Data Wrangling and Preparation

Data preparation typically consumes the majority of time in analytical projects. Raw data often includes missing values, inconsistent formatting, duplicate records, and various quality issues. Data wrangling encompasses the processes of cleaning, transforming, and organizing information into formats suitable for analysis.

Exploratory data analysis techniques help professionals understand data distributions, identify outliers, and recognize patterns before formal modeling begins. Tools like Pandas in Python streamline operations including filtering, aggregation, merging datasets, and reshaping data structures.

Professionals must develop systematic approaches to handling missing data, whether through deletion, imputation, or specialized modeling techniques. Feature engineering, the process of creating new variables from existing data, often provides substantial improvements in model performance. Understanding when and how to transform variables through scaling, normalization, or categorical encoding significantly impacts analytical outcomes.
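The steps described in this section can be sketched end to end on a tiny dataset. The example below uses only the standard library so each step is visible; in practice Pandas would do the same work in fewer lines. The records, field names, and the choice of median imputation are assumptions made for illustration.

```python
from statistics import median

# Hypothetical raw records containing a duplicate and a missing value.
raw = [
    {"id": 1, "age": 34, "plan": "basic"},
    {"id": 2, "age": None, "plan": "pro"},
    {"id": 2, "age": None, "plan": "pro"},  # duplicate record
    {"id": 3, "age": 50, "plan": "basic"},
    {"id": 4, "age": 42, "plan": "pro"},
]

# 1. Drop duplicates, keeping the first occurrence of each id.
seen, records = set(), []
for row in raw:
    if row["id"] not in seen:
        seen.add(row["id"])
        records.append(dict(row))

# 2. Impute missing ages with the median of the observed ages.
fill_value = median(r["age"] for r in records if r["age"] is not None)
for r in records:
    if r["age"] is None:
        r["age"] = fill_value

# 3. Min-max scale age into the range [0, 1].
lo = min(r["age"] for r in records)
hi = max(r["age"] for r in records)
for r in records:
    r["age_scaled"] = (r["age"] - lo) / (hi - lo)

# 4. One-hot encode the categorical "plan" field.
plans = sorted({r["plan"] for r in records})
for r in records:
    for p in plans:
        r[f"plan_{p}"] = int(r["plan"] == p)

for r in records:
    print(r)
```

Median imputation is only one option. Whether deletion, imputation, or a model-based approach is appropriate depends on why the values are missing.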

Data Visualization Capabilities

Effective communication of analytical findings requires sophisticated visualization skills. Tools including Tableau, Power BI, Matplotlib, and Seaborn enable creation of charts, graphs, dashboards, and interactive visualizations that make complex information accessible to diverse audiences.

Visualization serves multiple purposes throughout the analytical process. During exploratory analysis, visualizations help identify patterns, outliers, and relationships. When communicating results, well-designed graphics convey insights more effectively than tables or text descriptions. Interactive dashboards enable stakeholders to explore data and understand findings at their own pace.

Professionals must understand principles of visual perception, color theory, and information design to create visualizations that accurately represent data while remaining interpretable. Poor visualization choices can mislead audiences or obscure important patterns, undermining otherwise solid analytical work.

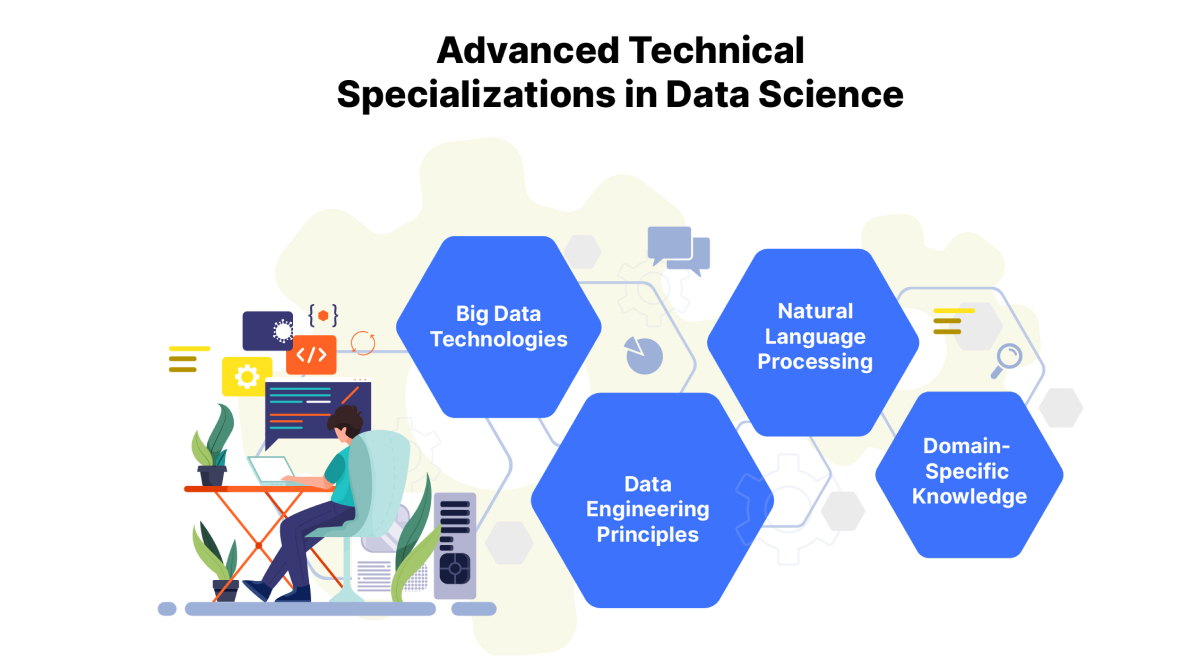

Advanced Technical Specializations

Beyond the basics, these high-leverage specialties separate good practitioners from the ones who drive real business transformation at scale.

Big Data Technologies

Traditional data processing tools cannot handle the volume, velocity, and variety of contemporary data sources. Apache Hadoop provides distributed storage and processing capabilities for massive datasets. Apache Spark offers faster in-memory processing suitable for iterative algorithms and real-time analytics.

Cloud platforms including Amazon Web Services, Google Cloud Platform, and Microsoft Azure provide scalable infrastructure for big data processing. Services such as AWS EMR, Google Cloud Dataproc, and Azure HDInsight enable organizations to process petabyte-scale datasets without maintaining physical infrastructure.

Stream processing frameworks like Apache Kafka handle real-time data flows, enabling immediate response to events and continuous analytics. Understanding distributed computing principles, data partitioning strategies, and fault tolerance mechanisms becomes essential when working at scale.
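Data partitioning is easier to reason about with a toy example. The sketch below imitates, on a single machine, how a distributed framework might route records to partitions by key, aggregate each partition independently, and then merge the partial results. The event list and partition count are hypothetical, and real systems such as Spark or Kafka add scheduling, replication, and fault tolerance on top of this idea.

```python
from collections import Counter
from zlib import crc32

# Hypothetical event stream and partition count.
events = ["click", "view", "click", "purchase", "view", "click"]
NUM_PARTITIONS = 3

def partition_for(key: str) -> int:
    """Stable hash partitioning: the same key always maps to the same partition."""
    return crc32(key.encode("utf-8")) % NUM_PARTITIONS

# Route each event to a partition by key.
partitions = [[] for _ in range(NUM_PARTITIONS)]
for event in events:
    partitions[partition_for(event)].append(event)

# "Map" step: each partition is counted independently (one worker each).
partial_counts = [Counter(p) for p in partitions]

# "Reduce" step: merge the partial results into a global count.
total = sum(partial_counts, Counter())
print(total)
```

Because all records with the same key land in the same partition, each worker can aggregate its share without coordinating with the others.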

Data Engineering Principles

Data scientists who understand data engineering concepts deliver substantially greater value. Data pipelines automate the movement and transformation of data from sources through processing stages to final analytical destinations. Workflow orchestration tools like Apache Airflow coordinate complex sequences of data operations.

ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform) processes move data between systems while ensuring quality and consistency. Data scientists who can design efficient pipelines, optimize data storage formats, and implement appropriate data quality checks contribute more effectively to organizational objectives.
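A minimal ETL sketch, assuming a CSV source and a SQLite destination, might look like the following. The column names, the sample rows, and the rule that rows without an amount are rejected are all hypothetical. An orchestration tool would typically schedule and monitor functions like these rather than replace them.

```python
import csv
import io
import sqlite3

# Hypothetical source data: one row is missing its amount.
RAW_CSV = """order_id,amount,currency
1,19.99,usd
2,,usd
3,5.00,USD
"""

def extract(text):
    """Extract: parse CSV text into a list of dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: apply a quality check and normalize types and casing."""
    clean, rejected = [], []
    for row in rows:
        if not row["amount"]:
            rejected.append(row)  # quality check: amount is required
            continue
        clean.append(
            (int(row["order_id"]), float(row["amount"]), row["currency"].upper())
        )
    return clean, rejected

def load(conn, rows):
    """Load: write the cleaned rows into the destination table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, amount REAL, currency TEXT)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()

conn = sqlite3.connect(":memory:")
clean_rows, rejected_rows = transform(extract(RAW_CSV))
load(conn, clean_rows)
loaded = conn.execute("SELECT COUNT(*), SUM(amount) FROM orders").fetchone()
print(loaded, len(rejected_rows))
conn.close()
```

Keeping rejected rows instead of silently dropping them makes it possible to report on data quality over time.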

Understanding database design, indexing strategies, and query optimization enables professionals to work efficiently with large datasets. Knowledge of data warehousing concepts, dimensional modeling, and business intelligence architectures provides context for how analytical work fits into broader organizational data strategies.
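The effect of an index on a query can be inspected directly. The sketch below uses SQLite's `EXPLAIN QUERY PLAN` statement through Python's sqlite3 module to compare the plan before and after an index is created. The `orders` table, its contents, and the index name are hypothetical, and the exact wording of the plan text varies between SQLite versions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders "
    "(order_id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL)"
)
# Hypothetical data: 1,000 orders spread across 100 customers.
conn.executemany(
    "INSERT INTO orders (customer_id, amount) VALUES (?, ?)",
    [(i % 100, float(i)) for i in range(1000)],
)

QUERY = "SELECT SUM(amount) FROM orders WHERE customer_id = 42"

def query_plan(connection, sql):
    """Return SQLite's plan description for a statement as one string."""
    rows = connection.execute("EXPLAIN QUERY PLAN " + sql).fetchall()
    return " | ".join(row[-1] for row in rows)

plan_before = query_plan(conn, QUERY)  # expected to describe a full table scan
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
plan_after = query_plan(conn, QUERY)   # expected to mention the new index

result = conn.execute(QUERY).fetchone()[0]
print(plan_before)
print(plan_after)
print(result)
conn.close()
```

The query returns the same answer either way. What changes is how much of the table the database has to read to produce it.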

Natural Language Processing

Text and speech data represent substantial untapped value for many organizations. Natural language processing techniques enable extraction of insights from documents, social media, customer feedback, and conversational interfaces. Applications include sentiment analysis, topic modeling, named entity recognition, and machine translation.

Libraries such as NLTK and spaCy provide foundational NLP capabilities. Transformer-based models including BERT and GPT have revolutionized language understanding tasks, achieving human-level performance on many benchmarks. Fine-tuning pre-trained language models for specific applications has become a standard approach.
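To show the basic shape of a sentiment analysis task, here is a deliberately simple lexicon-based sketch using only the standard library. The word lists and reviews are hypothetical, and this counting approach is far cruder than what spaCy pipelines or fine-tuned transformer models provide. It is meant only to illustrate tokenization followed by scoring.

```python
import re
from collections import Counter

# Hypothetical sentiment lexicons, invented for this example.
POSITIVE = {"great", "love", "fast", "helpful"}
NEGATIVE = {"slow", "broken", "poor", "refund"}

def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z']+", text.lower())

def sentiment_score(text):
    """Count positive words minus negative words."""
    counts = Counter(tokenize(text))
    positive_hits = sum(counts[word] for word in POSITIVE)
    negative_hits = sum(counts[word] for word in NEGATIVE)
    return positive_hits - negative_hits

reviews = [
    "Great product, fast delivery and helpful support!",
    "Arrived broken and the app is slow. I want a refund.",
]
scores = [sentiment_score(review) for review in reviews]
print(scores)
```

A word-count approach like this cannot handle negation or sarcasm, which is one reason pre-trained language models have become the standard starting point.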

Gartner predicts that at least 15% of routine work decisions will be made autonomously through agentic AI by 2028. Many of these autonomous systems rely substantially on natural language processing capabilities to understand instructions, extract information, and communicate results.

Domain-Specific Knowledge

Technical proficiency alone does not ensure success in data science roles. Deep understanding of specific industries enables professionals to formulate better questions, identify relevant variables, and generate actionable recommendations aligned with business objectives.

Healthcare data scientists must understand medical terminology, clinical workflows, regulatory requirements, and patient privacy considerations. Financial analysts require knowledge of market structures, risk management principles, and regulatory frameworks. Retail professionals benefit from understanding consumer behavior, supply chain dynamics, and seasonal patterns.

Domain expertise develops through immersion in specific industries, collaboration with subject matter experts, and continuous learning about sector-specific challenges and opportunities.

Critical Professional Skills

Technical mastery alone isn’t enough. These professional capabilities turn analysis into influence, insights into decisions, and data teams into strategic assets.

Communication and Presentation

Technical analysis produces value only when translated into formats that inform decisions and drive action. Data scientists must explain complex findings to executives, managers, and operational staff who lack technical backgrounds.

Written communication skills enable production of clear reports, documentation, and presentations. Verbal communication abilities support effective meetings, presentations, and collaborative discussions. Storytelling techniques help construct compelling narratives around data findings that motivate action and secure resources.

Analytical Problem-Solving

Data science projects begin with business problems rather than analytical techniques. Professionals must translate ambiguous business questions into well-defined analytical problems amenable to quantitative investigation.

Creative problem-solving involves generating multiple potential approaches, evaluating their feasibility and likely effectiveness, and adapting methods when initial approaches prove inadequate. Analytical rigor ensures that conclusions are supported by evidence and that limitations are acknowledged appropriately.

Collaboration and Teamwork

Contemporary data science projects involve cross-functional teams including engineers, analysts, product managers, and business stakeholders. Effective collaboration requires understanding different perspectives, respecting diverse expertise, and working toward shared objectives despite different priorities and constraints.

Data scientists must communicate technical requirements to engineers, explain analytical approaches to business stakeholders, and incorporate feedback from domain experts. This collaborative process often reveals important considerations that would be missed by individuals working in isolation.

Business Acumen

Aligning analytical work with organizational strategy requires understanding business operations, competitive dynamics, and financial constraints. Data scientists must evaluate whether analytical projects justify their costs through improved decisions, operational efficiencies, or competitive advantages.

Recommendations must consider implementation feasibility, organizational capacity, and potential unintended consequences. By 2027, 60% of data and analytics leaders will encounter critical failures in managing synthetic data, putting AI governance, model accuracy, and compliance at risk, according to a 2025 Gartner prediction. This statistic underscores the importance of understanding governance, risk, and compliance considerations alongside technical implementation.

Continuous Learning and Adaptability

The pace of change is relentless. McKinsey reports that employees are now three times more likely to be using generative AI than their leaders realize, highlighting how rapidly the field transforms. New tools, techniques, and best practices emerge constantly. Professionals must maintain commitment to continuous learning through certifications, conferences, research papers, and practical experimentation.

Adaptability enables professionals to shift between different tools, programming languages, and methodological approaches based on project requirements. Flexibility in thinking allows incorporation of new techniques while maintaining appropriate skepticism about unproven methods.

Conclusion

Data science in 2026 demands comprehensive expertise spanning technical proficiency, domain knowledge, and professional capabilities. The field has matured beyond basic programming and statistical analysis to require sophisticated understanding of advanced methodologies, ethical considerations, and business strategy.

Demand for these skills is surging, and roles are opening fast at organizations of all sizes, in all industries, and across nearly every business function. Compensation reflects the value that skilled professionals deliver through improved decisions, operational efficiencies, and competitive advantages. Growth potential extends from entry-level analytical roles through senior technical positions to executive leadership.

The pathway forward involves systematic skill development, practical experience, and sustained engagement with the evolving data science community. Organizations worldwide require professionals who can transform complex information into strategic direction. The opportunities exist for those willing to invest in developing the capabilities that this critical work demands.

Frequently Asked Questions

Q. What data science skills are most in demand for 2026?

A. Data science careers in 2026 require a balanced mix of technical expertise and professional skills. Core requirements include programming proficiency in Python, R, and SQL, strong foundations in statistics and mathematics, machine learning and AI capabilities, data wrangling and visualization skills, and growing familiarity with cloud, big data, and MLOps. Equally important are communication, business understanding, and strategic thinking.

Q. How can I future-proof my data science career?

A. Focus on a mix of technical depth (e.g., deep learning with TensorFlow/PyTorch) and soft skills like problem-solving, collaboration, and adaptability. Stay ahead by building domain knowledge in high-growth sectors like healthcare or finance, and commit to continuous learning amid rapid AI advancements.

Q. Is basic Python knowledge enough for data science jobs?

A. No, the bar has risen. Employers need proficiency across multiple languages (Python libraries like Pandas/NumPy, R for stats, SQL for databases) plus advanced math (linear algebra, probability) and ML techniques. Basic skills get you started, but comprehensive expertise commands premium roles.

Q. What role does DASCA certification play in mastering these skills?

A. DASCA certifications validate your technical foundation and advanced specializations, from machine learning to big data and NLP. They're designed for today's demands, helping you stand out in a market seeking proven pros who bridge data and business strategy.

Q. Are data science careers still in demand beyond 2026?

A. Yes. Demand for data science, analytics, and engineering professionals continues to grow across industries. Organizations rely on data-driven decision-making, AI adoption, and automation to remain competitive. This sustained demand extends from entry-level roles to senior leadership and executive positions.

Q. Do certifications help in advancing a data science career?

A. Yes. Certifications help signal verified competence, professional maturity, and commitment to ongoing development. Employer-recognized credentials are particularly valuable when transitioning between roles or moving into higher-responsibility positions. DASCA certifications are designed to align with career stages, from foundational roles to senior and leadership positions, making them especially relevant for long-term career progression in data-driven fields.