The data landscape has undergone a remarkable transformation over the past fifteen years. In 2010, a 1.5 PB data warehouse was an anomaly outside of Big Tech circles. Today, managing tens or even hundreds of petabytes of data has become commonplace for large enterprises, and companies like Snowflake and Databricks routinely handle exabytes of customer data. This explosive growth has fundamentally changed how organizations approach data management, moving them from isolated data silos to integrated modern data architectures that treat data as valuable products.

The Evolution of Data Management

Understanding where we've come from helps clarify where we need to go. Back in 2010, storage was both slow and prohibitively expensive, often limited to large database servers connected over SAN to data appliances from vendors like EMC, HP, or Fujitsu. Procuring additional capacity could take months. Today, SSDs have become commodities, and object storage that expands on demand is available to virtually anyone at a fraction of the cost.

Networking capabilities were also severely limited. Most back office networks operated on 1G Ethernet, with 10G and InfiniBand reserved for high performance clusters due to their high cost. In contrast, today's cloud infrastructure commonly uses 40G and 100G networks, drastically improving data throughput and reducing latency.

The compute landscape has transformed equally dramatically. Large SMP servers from vendors like Sun, Fujitsu, and HP dominated data processing, and cluster computing was still in its infancy. Massive data warehouses relied on expensive MPP systems such as Netezza, Vertica, or Greenplum. Advancements in distributed computing have now democratized access to scalable, high performance computing resources.

Understanding Data Silos and Their Challenges

Data silos develop when information gets trapped within isolated systems, departments, or applications, making integration or sharing difficult throughout the organization. These silos are substantial obstacles to successful data management and informed decision making. When organizations lack a strong data architecture framework, they encounter data quality problems and restricted accessibility, both of which hinder efficiency and organizational growth.

Even with remarkable technological progress, numerous enterprises continue to struggle with challenges that have existed for more than ten years, except that these issues now appear on a significantly larger scale. The first is providing reliable data to customers in the most efficient and dependable manner possible. The second is combining data from various disconnected sources, a task that is already complicated with internal systems and becomes dramatically harder when systems obtained through mergers and acquisitions are added. The third is handling data effectively even as traditional data governance approaches frequently prove impractical and become obstacles instead of facilitators.

The Emergence of Modern Data Architectures

Modern data architectures are flexible, scalable, and efficient frameworks capable of handling expanding data volumes across organizations while satisfying the requirements of today's digitally-focused businesses. They let organizations store, process, and analyze data originating from multiple sources in real time, and keep it accessible across all departments.

The worldwide artificial intelligence software market is projected to expand from $64 billion in 2022 to $251 billion by 2027, a compound annual growth rate of 31.4%. This figure encompasses AI platforms, applications, and infrastructure software. This growth is driving demand for modern data architectures that make data processing, governance, and management achievable across diverse platforms.
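The growth rate quoted above can be checked with a few lines of arithmetic. The sketch below is only an illustration: the function name `cagr` is an assumption, and the inputs are the two market sizes and years cited in the paragraph.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.10 means 10% per year)."""
    return (end_value / start_value) ** (1 / years) - 1


# Figures from the text: $64B in 2022 growing to $251B by 2027 (five years).
rate = cagr(64, 251, 2027 - 2022)
print(f"Implied CAGR: {rate:.1%}")
```

The result rounds to about 31.4%, which is consistent with the projection cited above.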

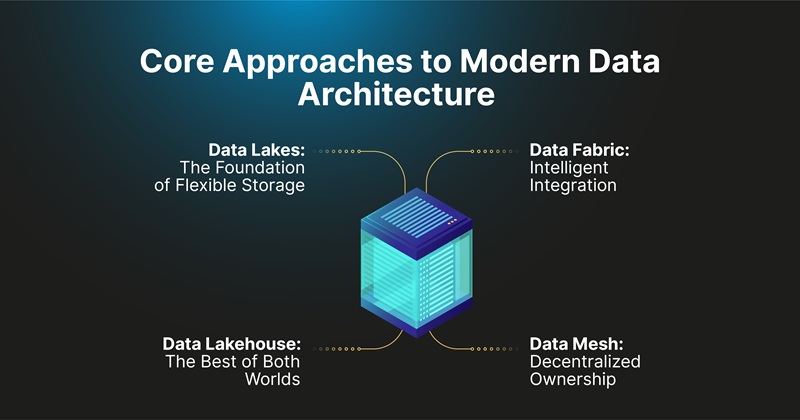

Core Approaches to Modern Data Architecture

Organizations today have several proven approaches to building modern data architectures, each with unique strengths suited to specific business needs.

Data Lakes: The Foundation of Flexible Storage

The term data lake was coined in 2011 by James Dixon, then Chief Technology Officer at Pentaho. A data lake enables analytics at scale by providing a unified repository for all data from various sources that anyone within an organization might need to analyze. Modern implementations store data in cloud based distributed object stores such as Amazon S3 or Azure Data Lake Storage Gen 2, forming the backbone of most large scale data platforms.

Data within a lake is typically organized into several logical zones or layers, an approach recently popularized under the terms medallion or multi hop architecture. Each zone in the lake is designed to group data with similar lifecycles, access patterns, quality, and security requirements. The most common zones include Landing Data Zone, Raw Data Zone, Conformed (Curated) Data Zone, and Transformed/Serving Data Zone.
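One common way to make zones concrete is to encode them in the object key prefix. The following is a minimal sketch under that assumption; the prefix names, the `ingest_date=` partition convention, and the helper names `object_key` and `next_zone` are all hypothetical and not taken from any particular platform.

```python
from datetime import date
from enum import Enum
from typing import Optional


class Zone(Enum):
    # The four zones named in the text, in the order data typically moves.
    LANDING = "landing"
    RAW = "raw"
    CONFORMED = "conformed"
    SERVING = "serving"


ZONE_ORDER = [Zone.LANDING, Zone.RAW, Zone.CONFORMED, Zone.SERVING]


def object_key(zone: Zone, source: str, dataset: str, day: date, filename: str) -> str:
    """Build an object-store key that groups data by zone, source, and ingest date."""
    return f"{zone.value}/{source}/{dataset}/ingest_date={day.isoformat()}/{filename}"


def next_zone(zone: Zone) -> Optional[Zone]:
    """Return the zone data is promoted to next, or None for the final zone."""
    idx = ZONE_ORDER.index(zone)
    return ZONE_ORDER[idx + 1] if idx + 1 < len(ZONE_ORDER) else None


print(object_key(Zone.RAW, "crm", "orders", date(2024, 1, 15), "part-0.parquet"))
print(next_zone(Zone.RAW))
```

Keeping the zone as the leading path segment makes it straightforward to attach different lifecycle and access policies to each prefix.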

Data lakes offer significant advantages: cost efficiency, through flexible and scalable cloud based storage solutions; elasticity, with virtually limitless on demand storage that eliminates extensive capacity planning; schema flexibility, allowing raw data to be stored in whatever form the source provides; and improved data quality management, through the immutable nature of raw data combined with continuous availability.

Data Fabric: Intelligent Integration

Data fabric is the next step in the evolution of data architecture and management tools. It's designed to create more fluidity across different data pipelines and cloud environments, making data securely accessible to end users and facilitating self service data consumption.

Data fabric architecture streamlines end to end integration using intelligent and automated systems that learn from data pipelines. By integrating across various data sources, data scientists can create a holistic view of customers, accessible on one dashboard. The architecture then makes recommendations to better capture the value of data and increase productivity, accelerating the time to value for all data products.

Data fabric leverages continuous analytics over existing, discoverable, and inferred metadata assets to facilitate the design, deployment, and utilization of integrated and reusable data across various environments, including hybrid and multi cloud platforms. Its five key pillars are the Augmented Data Catalog, the Knowledge Graph Enriched with Semantics, the Metadata Activation and Recommendation Engine, Data Preparation and Delivery, and Orchestration and DataOps.

Data Lakehouse: The Best of Both Worlds

The Data Lakehouse concept was introduced by the Databricks team and further popularized by Bill Inmon in his book "Building the Data Lakehouse". Today, the idea is widely adopted by major players in data management, including Microsoft, Amazon, Dremio, Starburst, and others.

A data lakehouse architecture enables data access across hybrid cloud from a single point of entry, allowing organizations to unify, curate, and prepare data for AI models. It combines the flexibility of a data lake with the performance and structure of a data warehouse. Most lakehouse solutions have intelligent metadata layers that make it easier to categorize and classify unstructured data.

Data lakehouses help organizations construct price-performant workflows based on a genuine understanding of their data and real business requirements. This enables workflow optimization, which improves costs and performance, and the discovery of hidden connections in the data.

Data Mesh: Decentralized Ownership

Data Mesh is a relatively new concept in data management, introduced as a sociotechnical approach to sharing, accessing, and managing analytical data in complex, large scale environments. According to Gartner, Data Mesh is currently at the peak of inflated expectations, which reflects both growing interest and growing scrutiny.

Data Mesh represents fundamental shifts across multiple dimensions. The organizational shift moves from centralized data ownership to a decentralized model where data ownership and accountability are pushed back to business domains. The architectural shift proposes a distributed system where data is connected and accessed through standardized protocols. The technological shift treats data as a first class citizen, with data and code considered autonomous, lively units that can evolve independently.

Core design principles include domain oriented decentralized data ownership, data as a product, self-serve data infrastructure, and federated computational governance. However, Data Mesh has a market penetration of only 5% to 20%, and in its 2023 Hype Cycle Gartner predicts that it will become obsolete before reaching the plateau of productivity.
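To illustrate how "data as a product" and "federated computational governance" can fit together, here is a minimal sketch in which each domain publishes a descriptor for its data product and a shared set of policies is evaluated as code. The `DataProduct` fields and the three rules in `governance_violations` are illustrative assumptions, not part of any Data Mesh specification.

```python
from dataclasses import dataclass, field


@dataclass
class DataProduct:
    """Hypothetical descriptor that a domain team publishes for its data product."""
    name: str
    domain: str
    owner: str                      # accountable team or person in the domain
    schema: dict[str, str]          # column name -> type
    contains_pii: bool = False
    tags: list[str] = field(default_factory=list)


def governance_violations(product: DataProduct) -> list[str]:
    """Evaluate organization-wide policies (assumed rules) against one product."""
    violations = []
    if not product.owner:
        violations.append("missing owner")
    if not product.schema:
        violations.append("missing schema")
    if product.contains_pii and "pii" not in product.tags:
        violations.append("PII product must carry the 'pii' tag")
    return violations


orders = DataProduct(
    name="orders",
    domain="sales",
    owner="sales-data-team",
    schema={"order_id": "string", "email": "string"},
    contains_pii=True,
)
print(governance_violations(orders))
```

In this arrangement the domain team owns the descriptor, while the policy function is shared across domains, so each team keeps control of its data and the rules stay uniform.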

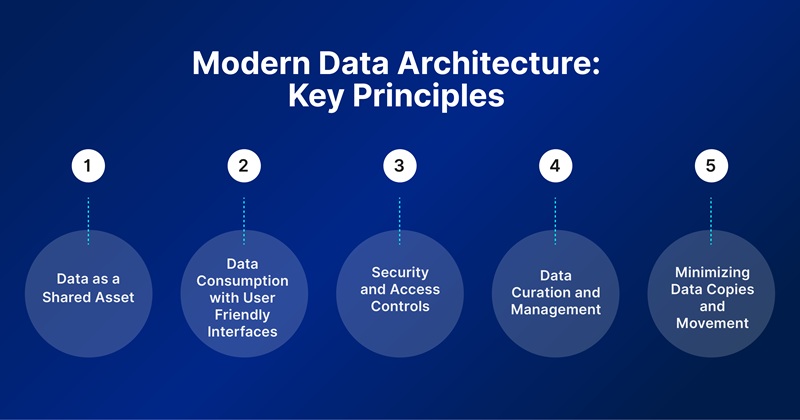

The Guiding Principles of Modern Data Architecture

There are several key principles that ensure data is managed efficiently and effectively in modern architectures.

Data as a Shared Asset

Treating data as a shared asset throughout the company helps break down silos and gives every team access to correct and timely information. This supports effective decision making across the company.

Data Consumption with User Friendly Interfaces

Using simple technologies like dashboards to streamline data access lets non technical users interact with data easily and make data driven decisions without technical expertise.

Security and Access Controls

Data security is prioritized through access controls, encryption, and constant monitoring, which protect sensitive information and ensure that only authorized users can access it.
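As a rough illustration of access control combined with monitoring, the sketch below uses a deny-by-default permission lookup and records every access attempt in an audit log. The roles, dataset names, and the `GRANTS` table are hypothetical; a real platform would typically delegate this to its identity and policy services.

```python
# Hypothetical grants: role -> dataset -> allowed actions.
GRANTS: dict[str, dict[str, set[str]]] = {
    "analyst": {"sales.orders": {"read"}},
    "data_engineer": {"sales.orders": {"read", "write"}},
}


def is_allowed(role: str, dataset: str, action: str) -> bool:
    """Deny by default: allow only when the role holds an explicit grant."""
    return action in GRANTS.get(role, {}).get(dataset, set())


def read_dataset(role: str, dataset: str, audit_log: list) -> str:
    """Check permission, record the attempt for monitoring, then serve the data."""
    allowed = is_allowed(role, dataset, "read")
    audit_log.append((role, dataset, "read", allowed))
    if not allowed:
        raise PermissionError(f"{role} may not read {dataset}")
    return f"rows from {dataset}"  # placeholder for the actual query


log: list = []
print(read_dataset("analyst", "sales.orders", log))
print(log)
```

Logging denied attempts as well as successful ones is what makes the audit trail useful for monitoring.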

Data Curation and Management

Proper data curation ensures that data is clean, relevant, and timely, which is essential for accurate analysis and decision making. Effective data governance frameworks play a crucial role here.

Minimizing Data Copies and Movement

Reducing unnecessary data movement and replication helps optimize performance, lower costs, and maintain data integrity by avoiding discrepancies between different datasets.

Key Components of Modern Data Architecture

There are several critical components that help businesses manage their data effectively and flexibly.

- Data integration helps companies avoid silos and increase efficiency by enabling easy integration of several data systems and tools, guaranteeing smooth data flow across platforms.

- Decentralized governance keeps general policies in place for consistency and compliance while enabling individual teams to control their own data.

- Self service capabilities allow teams to access and examine data without depending heavily on IT staff, accelerating decision making.

- Data discoverability ensures that data is searchable and accessible so that teams can use the correct data at the right time.

- Automation of data processing tasks, including data intake and cleansing, reduces manual effort and accelerates the availability of insights, thereby enabling real time decision making.

- Infrastructure management and CI/CD pipelines are essential for ensuring that data solutions are updated and deployed quickly without disrupting current systems.
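Of the components listed above, data discoverability is perhaps the easiest to illustrate in a few lines. The sketch below models a tiny in-memory catalog with keyword search; the `CatalogEntry` fields and the sample entries are assumptions made for illustration, and a production catalog would add lineage, ownership workflows, and access metadata.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CatalogEntry:
    """Hypothetical catalog record describing one dataset."""
    name: str
    owner: str
    description: str
    tags: tuple[str, ...] = ()


def search(catalog: list[CatalogEntry], term: str) -> list[CatalogEntry]:
    """Case-insensitive match on name, description, or an exact tag."""
    needle = term.lower()
    return [
        entry
        for entry in catalog
        if needle in entry.name.lower()
        or needle in entry.description.lower()
        or any(needle == tag.lower() for tag in entry.tags)
    ]


catalog = [
    CatalogEntry("sales.orders", "sales-data-team", "Confirmed customer orders", ("finance",)),
    CatalogEntry("hr.payroll", "hr-data-team", "Monthly payroll runs", ("pii", "finance")),
    CatalogEntry("web.clicks", "marketing-data-team", "Raw clickstream events"),
]
print([entry.name for entry in search(catalog, "finance")])
```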

Challenges in Building Modern Data Architecture

There are several key challenges organizations face during implementation.

Outdated technology limitations continue to present a major barrier, as numerous enterprises remain reliant on antiquated systems that lack compatibility with contemporary data platforms. Integrating these obsolete technologies with current architecture proves both financially demanding and labor-intensive. Data integrity problems remain prevalent, especially when dealing with extensive, fragmented datasets where inaccurate information, redundant entries, and absent data points can diminish overall system performance.
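The data integrity problems mentioned above (redundant entries and absent data points) can be surfaced with simple profiling before data is promoted downstream. The following is a minimal sketch over plain dictionaries; the function name `quality_report`, the choice of key, and the sample rows are illustrative assumptions.

```python
from collections import Counter


def quality_report(rows: list[dict], key: str, required: list[str]) -> dict:
    """Count duplicate keys and missing required values in a batch of records."""
    key_counts = Counter(row.get(key) for row in rows)
    duplicate_keys = sorted(
        k for k, n in key_counts.items() if n > 1 and k is not None
    )
    # Treat both None and the empty string as a missing value.
    missing = {
        name: sum(1 for row in rows if row.get(name) in (None, ""))
        for name in required
    }
    return {"row_count": len(rows), "duplicate_keys": duplicate_keys, "missing": missing}


rows = [
    {"id": 1, "email": "a@example.com"},
    {"id": 2, "email": ""},
    {"id": 2, "email": "b@example.com"},
    {"id": 3, "email": None},
]
print(quality_report(rows, key="id", required=["email"]))
```

A report like this can act as a gate: a batch with duplicate keys or too many missing values is held back instead of being loaded.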

Adapting infrastructure to support sophisticated analytics and artificial intelligence demands specialized equipment and substantial processing power. Organizations lacking cloud-native, expandable resources find it particularly difficult to transform existing data frameworks to accommodate these emerging technologies. Privacy protection and regulatory compliance issues become progressively more intricate when information is distributed across multiple platforms and cloud-based environments.

Additional challenges include the complexity of integrating with and adapting to specific business processes while managing a multitude of services from different vendors; high costs, since building complex data platforms typically involves significant investment, often running into millions of dollars; and people and change management, as the transition from traditional siloed IT operations to domain agnostic services can be daunting.

Real World Success Stories

There are several examples of organizations successfully implementing modern data architectures.

HR software leader Paycor built a multi-tenant cloud data warehouse in partnership with Informatica and Snowflake, achieving a 512% ROI on its cloud data warehouse, a 90% reduction in AI data prep time, and savings of approximately 36.5 work hours annually.

Banco ABC Brasil leveraged Informatica Intelligent Data Management Cloud to power data in Google Cloud Storage and Google BigQuery. With 100% of its data lake catalogued and monitored for data quality, credit approvals accelerated by 70%, 360 degree customer management improved, financial and customer data ingestion sped up by 50%, and predictive model design and maintenance times fell by 60 to 70%.

TelevisaUnivision built a single, comprehensive cloud native data management platform that scales organically. The organization cut enterprise integration costs by 44%, increased customer service efficiency by 35%, and achieved steady gains in scalability, cost, and resource management.

Conclusion

The journey from data silos to data products represents a fundamental transformation in how organizations manage and leverage their most valuable asset: data. Modern data architectures, whether data lakes, data fabric, data lakehouse, or data mesh, provide the foundation for breaking down barriers, enabling real time insights, and preparing organizations for the AI driven future.

Success requires careful consideration of organizational needs, technical capabilities, and business objectives. By following best practices, understanding available options, and learning from real world implementations, organizations can build modern data architectures that transform isolated data silos into valuable, accessible data products that drive business success and competitive advantage in an increasingly data driven world.